Among the various worlds created in the Star Trek universe, few are as emblematic and disturbing as that of the Borg.

This species, a mixture of human and robot, merges technology and biology into a single collective entity: individuality is lost in favor of a collective mind. The Borg represent an existential threat to other species, as their philosophy is that of assimilation: every living being is integrated into the Collective, losing their identity and becoming part of a larger and more efficient system.

“Resistance is futile”

This phrase, obsessively repeated by the Borg, explicitly declares that opposing their assimilation is useless.

The Borg do not seek consensus or cooperation; they impose their will through force and superior technology. The Borg Queen, the central figure of their social system, represents the fulcrum of the collective: she is able to understand the needs of each individual and coordinate the actions of the Collective efficiently and precisely.

The idea of the Borg can be superimposed quite faithfully on the concept of a hive.

In the hive, every bee works for the good of the colony, sacrificing its own individuality for the success of the group.

In the Borg, this concept is taken to the extreme and proposed as the only possible way for survival and evolution.

The Borg Queen has a unique ability to coordinate the entire colony and direct the actions of individual drones efficiently and precisely.

The Borg in filmography and popular culture

The Borg first appeared in the television series “Star Trek: The Next Generation” in 1989 and have since become one of the most iconic enemies in the Star Trek universe.

“I am the beginning, the end, the one who is many. I am the Borg.”

This phrase, spoken by the Borg Queen, sums up the overwhelming power and high self-regard of the Borg, embodied by their own queen.

Let’s now step out of the Borg world and reflect on how this metaphor applies to software development, and on how far we are inevitably becoming software development Borg ourselves.

The dawn of assimilation: the era of generative AI in software development

Until a few years ago, the relationship between developer and software tool was clear and well defined: the human conceived the logic and wrote the code, and the machine executed it. With the advent of generative AI, this dynamic has been reversed.

What was once code written by humans is now generated by artificial intelligence models and, over time, the volume of code generated by AI will far exceed that written by humans.

This change radically alters the perspective: the programmer's job is among those inevitably destined to be transformed.

The phrase “Resistance is futile”, spoken by the Borg Collective in the Star Trek universe, no longer appears as a dystopian threat from an imaginary alien species, but as an accurate description of current market dynamics and operational pressures weighing on global engineering departments.

Using generative AI in any form, whether a chat, an agent, or a suggestion engine embedded in an editor or development environment, is no longer optional. Where once one could casually choose not to use AI, doing so is now increasingly difficult. This turns the programmer into a hybrid: a being who integrates their own cognitive capacity with that of a generative artificial intelligence model.

The motivations are concrete and not negligible: from the speed required by the company, to the quantitative improvement of the code produced, to the parallelization of actions even in the absence of personnel. These are not artificial pressures, but continuous demands of the modern market.

In a global AI market valued in the hundreds of billions of dollars (and the figure continues to rise impressively), the technological infrastructure has reconfigured itself around centralized models that dictate the pace, style, and substance of code production.

“Micron abandons private individuals, Crucial now serves only large AI clients”

Some tech giants are already adapting their offerings to serve AI needs exclusively, signaling a paradigm shift in the software development ecosystem. Buying a stick of RAM or an SSD for personal use has become almost a luxury in this period, one that only companies ravenously hungry for computational resources to fuel their artificial intelligence models can afford.

We are therefore moving towards an era where software development requires deep integration with generative AI, but generative AI is managed by centralized foundation models that dictate the rules of the game.

Anthropic, whose models are currently the most used in the software development field, both directly and indirectly through numerous integrations, has stated that it expects to reach its internal goal of 9 billion dollars in annual revenue by the end of 2025 and to exceed 20 billion dollars the following year in the base scenario, with a potential upside of up to 26 billion dollars.

Other models like Google’s Gemini and OpenAI’s ChatGPT are following similar growth trajectories, consolidating their dominance in the generative AI market.

To these few American companies we can add some Chinese giants, but we are still talking about an oligopoly of a few actors who control the majority of the generative AI market.

When speaking of AI for software development, the models that dominate the market are truly few: it is true that open source alternatives exist, but their quality and capacity are still far from those of proprietary models.

The Borg and their queen

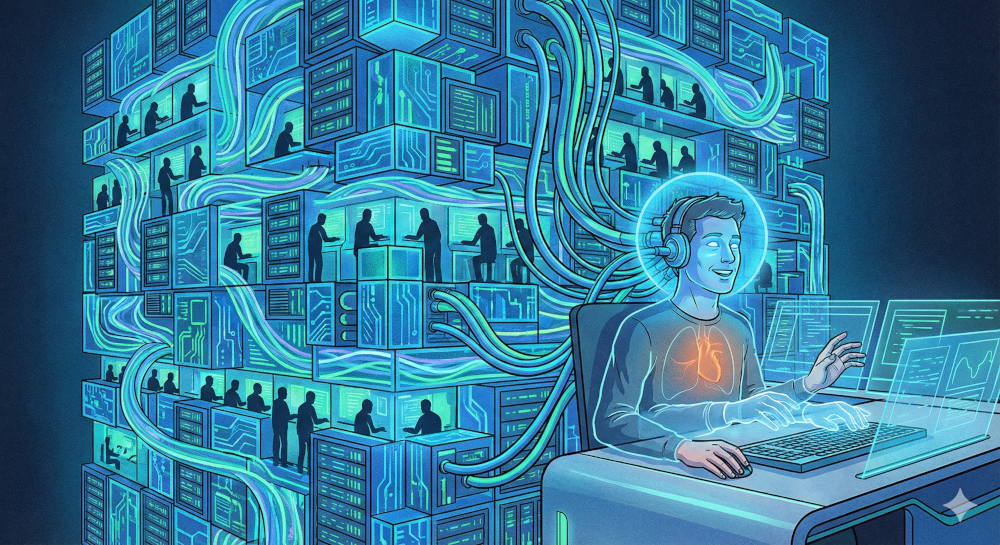

Just as the Borg depend on their queen to coordinate the actions of the collective, modern developers are becoming increasingly dependent on centralized foundation models to guide their daily work.

The risk of such strong adoption of generative AI technology in software development is that a structural dependency similar to that of the Borg is being created, where individuality and personal competence are sacrificed on the altar of collective efficiency.

The problem of deskilling mainly concerns those who start their career by completely delegating code writing to AI, without ever developing a deep understanding of the fundamentals. A senior developer who uses AI to accelerate repetitive tasks or generate boilerplate maintains critical control over the process; a junior who has never manually debugged a segmentation fault or optimized a slow query risks becoming a superficial operator, unable to intervene when AI fails or produces suboptimal solutions. Over time, however, the problem can manifest itself even at higher levels, when dependence on AI becomes so ingrained as to erode the capacity for critical thinking and autonomous problem-solving.

The economic context of assimilation

According to some statistics, the adoption of AI tools has now reached 90% of developers globally, with daily or weekly use exceeding 80%. We are not facing a novelty or a luxury, but an essential component of the technical workflow.

Companies are investing massively in AI infrastructures, both at the software level and, impressively, also at the hardware level with ever new and more powerful data centers: the illusion of being able to do it alone is rapidly fading. There is a real risk that open source models will fail to keep up with the speed of innovation and distribution of proprietary models, leading to an almost total dependence on the latter.

Even where generative AI is resisted on the grounds of software quality, it is still used to supervise processes, analyze data, and improve the production of manuals and translations.

The Borg Queen and cognitive control

The Borg Queen is not a political monarch; she is an entity whose task is to bring order to chaos. She filters the thoughts of the Borg and directs their will towards perfection.

If we think about it, in the software context this function is assumed by large language models: centralized entities that suggest programming best practices based on what they have learned, and that guide and influence architectural decisions such as Vue or Next.js, Python or Go, microservices or monoliths.

Unlike the open source era, where knowledge was distributed horizontally through forums, documentation, and decentralized repositories, the AI era is characterized by radical centralization.

“I use Zig because Claude Sonnet said so”

When a developer queries the AI, they are not accessing objective knowledge, but a probabilistic synthesis derived from the weights of the central model. This model resembles the Borg Central Plexus, the entity at the center of the Borg Cube, whose task is to aggregate all its drones and connect to other Borg ships.

This dynamic creates a critical dependency. Decisions on how to structure a class, which library to use, or how to handle security are no longer the result of individual deliberation or peer debate, but are suggested (and often accepted uncritically) by the central algorithm.

Given how current LLMs are built, trained on mountains of public code, it is much more likely that widely recognized patterns emerge rather than niche or innovative approaches. The developer-drone becomes a mere executor of the algorithmic will, a channel through which an algorithm manages to manifest itself in the physical world.

How many times have you deleted and rewritten code generated by AI because “it wasn’t what you wanted”? How many times have you passively accepted the AI’s suggestion without questioning it?

If you accepted more often than you rewrote, then you are about to be assimilated.

Shadow AI and structural dependence

Centralization brings with it the risk of so-called “Shadow AI”: ungoverned implementations. Just as the Queen can direct the Borg towards specific tasks, the unregulated use of LLMs creates opaque data flows within organizations. Taken individually, these actions may seem correct, but without an overall view companies lose the boundaries of their sovereignty and become subject to uncontrollable external influences.

The laziness of models

In recent months, some models became notorious for refusing to work on certain tasks, or for limiting and truncating their results. The reaction: PANIC.

Many programmers, by now accustomed to outsourcing their work, suddenly found themselves thrown into the cosmic void of the world without AI. They were no longer ready to autonomously face the compiler, to fix bugs, or to implement new code without asking a machine for help.

This incident highlighted how much the tendency to delegate is undermining the ability of many developers to operate autonomously.

We are creating a systemic cultural risk

One of the greatest fears when designing complex systems is the single point of failure: a point within the architecture that, if it fails, undermines the entire process (some people are thinking of AWS or Cloudflare right now, and the rest are lying).

The real risk is not so much technical as cultural: if entire generations of developers get used to accepting suggestions without rigorous checks, if CI/CD pipelines become imposed bureaucratic formalities rather than quality barriers, if code reviews are reduced to “AI wrote it so it must be right”, the average quality of software could progressively degrade.

Certainly, defenses exist: automated tests, static code analysis, human reviews. But these defenses work only if maintained by developers who deeply understand what they are verifying. If fundamental understanding is eroded by dependence on AI and the one generating the code is also the one verifying it, the risk of a systemic collapse increases.

If SQL injection is so widespread globally, why would we think that an LLM trained on a mountain of vulnerable code cannot reproduce the same vulnerability, when statistically that is the most probable code for it to generate?
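To make the point concrete, here is a minimal sketch in Python using the standard-library `sqlite3` module. The table, the function names (`build_demo_db`, `find_user_vulnerable`, `find_user_safe`) and the payload are illustrative assumptions, not taken from any real codebase. The first lookup uses the string-interpolation pattern that appears in so much public code; the second uses parameter binding.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database with two hypothetical users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, is_admin INTEGER)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", 1), ("bob", 0)],
    )
    return conn


def find_user_vulnerable(conn: sqlite3.Connection, name: str) -> list[str]:
    # The input is pasted into the SQL text, so a crafted value
    # can change the structure of the query itself.
    query = f"SELECT name FROM users WHERE name = '{name}'"
    return [row[0] for row in conn.execute(query)]


def find_user_safe(conn: sqlite3.Connection, name: str) -> list[str]:
    # The input is bound as a parameter, so it is always treated as data.
    query = "SELECT name FROM users WHERE name = ?"
    return [row[0] for row in conn.execute(query, (name,))]


# A classic injection payload: it closes the string literal and adds
# a condition that is true for every row.
PAYLOAD = "nobody' OR '1'='1"

if __name__ == "__main__":
    db = build_demo_db()
    print("vulnerable:", find_user_vulnerable(db, PAYLOAD))
    print("safe:      ", find_user_safe(db, PAYLOAD))
```

With this payload the interpolated query matches every row in the table, while the parameterized one matches none. Both functions look equally plausible at a glance, which is exactly why a reviewer who understands the difference is still needed.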

The productivity paradox

The guiding principle of the Borg is absolute efficiency. I believe I have already spoken about the problem of efficiency at all costs, but talking about it again never hurts. In software development via AI, there is an undeniable measurable advantage: what previously took an hour can now be done in minutes. This gain is real and precious.

However, the nature of the saved time must be analyzed carefully. Before, time was dedicated to coding and testing; now it is dedicated to understanding the generated code and verifying that what is produced is actually compliant. Time is saved in the writing phase, but if we consider the entire life cycle of the code, the advantage can shrink significantly.

More and more developers describe themselves as vibecoders:

“I write a prompt and the AI does everything for me”

Delegating low-level tasks is a natural evolution of computer science, which has always moved towards abstraction. The problem is not delegating for efficiency, but losing the ability to intervene manually when necessary, a need that will arise sooner or later, because no artificial intelligence model is infallible.

When the developer stops understanding the underlying mechanisms, they lose contact with the operational reality of the software. When they find themselves (and sooner or later it will happen) having to solve a complex problem that the AI cannot handle, they will no longer have the mental tools to do so and will find themselves facing hundreds of lines of code that they do not fully understand.

Like a Borg drone repairing the hull without understanding the physics of warp travel, the modern developer risks becoming a superficial operator of a logic they no longer control.

When should we be afraid?

Dependency is the biggest problem of every technology. My colleagues once told me that without Google I wouldn’t even know how to turn on the computer; in the future, if we keep delegating blindly and indiscriminately to AI, programmers will no longer know how to write “Hello World”, assuming that still makes sense.

In 2025, however, we are already observing the signs of this drift: developers start to feel blocked or slow if they don’t have GitHub Copilot available due to a service outage:

“Copilot is down: let's go get a coffee”

Doesn’t sound so strange, right?

Let’s overcome the Borg Cube logic

The analogy with the Borg offers us an unconventional way to examine our technological trajectory. We are building a system of exceptional power, capable of performing complex tasks faster than a human. For now, these systems are imperfect, but over time they will become better and more efficient.

Here lies the fundamental paradox: the adoption of generative AI is now inevitable for those who want to remain competitive in the job market. Traditional “resistance” is truly futile. But how we adopt it is not predetermined.

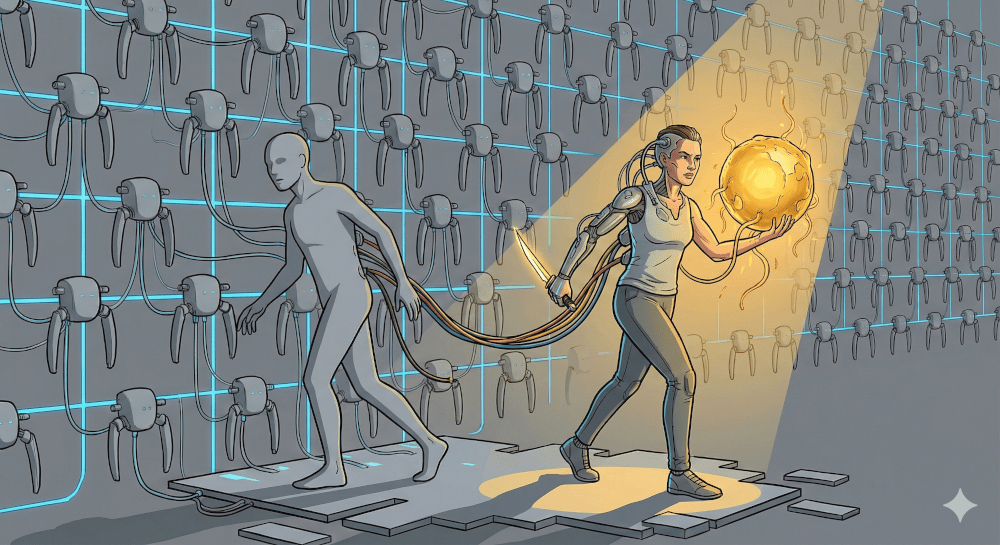

The real choice is not between using or not using AI, but between conscious integration and passive dependence. We can become like Seven of Nine, the Star Trek character who maintains her own identity while having integrated Borg technology, or we can be simple drones without critical will.

Let’s not lose the practice of programming: let’s keep a share of our development work free from AI aids, so as to maintain our problem-solving skills.

Let’s review code by understanding its logic: the fact that a pull request doesn’t cause tests to fail is not sufficient reason to accept it into the main branch.
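A small, hypothetical illustration in Python of why green tests alone are not enough. The function names and the test cases below are invented for this sketch: the first implementation is the kind of plausible-looking code that might arrive in a pull request, the second is what a reviewer who knows the rule would expect.

```python
def is_leap_year(year: int) -> bool:
    # Plausible at a glance, but incomplete: it ignores the century rule.
    return year % 4 == 0


def is_leap_year_reviewed(year: int) -> bool:
    # Gregorian rule: divisible by 4, except century years
    # that are not divisible by 400.
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


# A thin test suite (hypothetical) that both versions pass.
WEAK_CASES = {2024: True, 2023: False, 2000: True}


def passes(func, cases: dict[int, bool]) -> bool:
    """Return True if func gives the expected answer for every case."""
    return all(func(year) == expected for year, expected in cases.items())


if __name__ == "__main__":
    print("unreviewed passes weak suite:", passes(is_leap_year, WEAK_CASES))
    print("reviewed passes weak suite:  ", passes(is_leap_year_reviewed, WEAK_CASES))
    # 1900 is not a leap year; only the reviewed version gets this right.
    print("1900 ->", is_leap_year(1900), "vs", is_leap_year_reviewed(1900))
```

Both versions keep the pipeline green, and only reading the logic reveals that the first one is wrong for years such as 1900.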

Let’s not bind ourselves to a single product, mixing closed and open source products: putting multiple ideas in competition can only be good for our projects.

If we allow centralized models to dictate not only the syntax but also the logic and ethics of our code, we risk waking up in a future where programming is just writing increasingly banal prompts, while the AI fixes everything for us.

The real resistance is not refusing technology, but refusing to become uncritical operators. In a world where artificial intelligence pervades every aspect of development, the individual voice of the developer, imperfect but capable of genuine intuition and creativity, still has irreplaceable value.

The data of 2025 tells us that assimilation is already underway. The question is no longer whether we will become human-AI hybrids, but how we will become so while keeping our critical capacity intact.

The history of the Borg teaches us that a collective can be perfect, but it is also terribly silent, and in silence innovation dies.