In traditional software development, JSON (JavaScript Object Notation) is the undisputed king. It’s readable, structured, and universal. But here’s the uncomfortable truth nobody told you when you started building with AI: LLMs don’t care about the “purity” of your data structure; they care about tokens.

If you’re still exchanging large JSON blocks with models like GPT-4, Claude 3.5, or Llama 3, you’re spending context on syntax instead of content, increasing latency, and burning your budget.

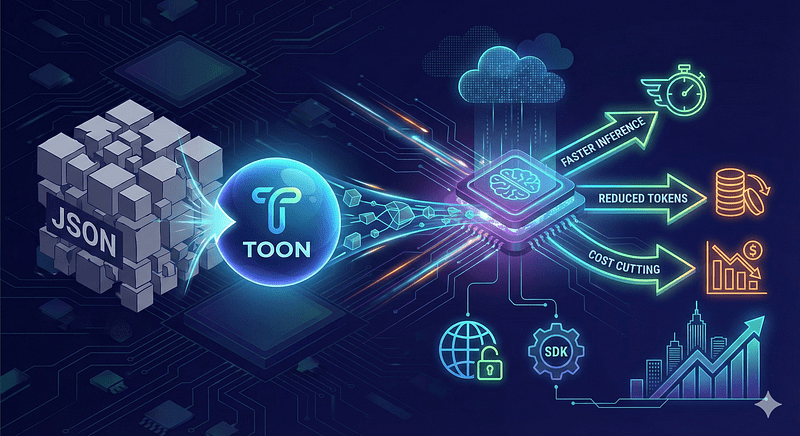

Recently, TOON (Token-Oriented Object Notation) was launched as a compact, human-readable encoding that minimizes tokens while making structure easy for models to follow, and it has drawn a lot of attention since. Used in place of plain JSON in LLM prompts and payloads, TOON reduces token counts, which in turn lowers latency and cost. The specification and SDKs are publicly available, and the project's published benchmarks report practical improvements for production workloads.

TOON vs JSON in LLMs: Why Does It Matter?

TOON is a compact, schema-aware notation designed specifically to feed structured data to language models more efficiently than traditional JSON. It’s not just a syntax shortcut: it’s a representation designed to minimize redundant tokens while maintaining human readability and compatibility with schemas and validation.

TOON has arrived to eliminate the fat and leave only the muscle. Designed specifically for LLM inference, TOON prioritizes semantic density over syntactic rigidity.

The Problem: JSON’s “Syntax Tax”

To understand why JSON is inefficient for AI, we must first understand how models “read”: tokenization.

LLMs use tokenizers such as BPE (Byte Pair Encoding). Every character counts, but common character sequences are merged into single tokens. JSON, however, is full of characters that are “poison” for token efficiency:

- Double quotes ("): repeated around every key and every string value

- Braces and brackets ({}, []): delimiters wrapped around every object and array

- Whitespace and line breaks: visual formatting that consumes context

In an average RAG (Retrieval-Augmented Generation) prompt, JSON syntax can occupy between 15% and 25% of your total tokens. That’s up to a quarter of your context window that isn’t available for reasoning or data.
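Token counts depend on the tokenizer, but a character-level proxy shows how much of a JSON payload is pure syntax. The sketch below uses only the standard library. It counts quotes, braces, brackets, colons, commas, and whitespace in a serialized payload. The function name `syntax_share` and the sample records are hypothetical, and characters are not tokens, so treat the result as a rough indicator rather than a token measurement.

```python
import json

# Characters that carry structure rather than data in serialized JSON.
SYNTAX_CHARS = set('{}[]":,')


def syntax_share(payload: str) -> float:
    """Fraction of characters that are JSON punctuation or whitespace.

    Whitespace, colons, and commas inside string values are counted too, so
    this slightly overstates the overhead; it is a proxy, not a token count.
    """
    if not payload:
        return 0.0
    overhead = sum(1 for ch in payload if ch in SYNTAX_CHARS or ch.isspace())
    return overhead / len(payload)


# Hypothetical sample records, shaped like the user example in this article.
records = {"users": [
    {"id": 101, "name": "Ana García", "role": "admin", "active": True},
    {"id": 102, "name": "Beto Pérez", "role": "user", "active": False},
]}

pretty = json.dumps(records, indent=2, ensure_ascii=False)
compact = json.dumps(records, separators=(",", ":"), ensure_ascii=False)

print(f"pretty-printed: {syntax_share(pretty):.0%} syntax characters")
print(f"compact:        {syntax_share(compact):.0%} syntax characters")
```

Pretty-printing only adds whitespace, so its syntax share is always higher than the compact form's. This is the "visual formatting that consumes context" cost in isolation.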

What Makes TOON Different?

TOON combines YAML’s indentation-based structure for nested objects with a CSV-style tabular layout for uniform arrays. Here’s what sets it apart:

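- Redundancy elimination: TOON drops quotes around keys (and around most string values) and uses delimiters that are usually single tokens in most model vocabularies, such as the comma, the pipe (|), or minimal indentation

- Array compression: instead of repeating every key in every object, it declares the array length and field names once in a header and then lists one row of values per item, a compact schema the LLM can follow easily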

- Token-friendly: designed to align with how BPE tokenizers group words

Practical Example: TOON vs JSON in LLMs

Let’s look at a simple example: a list of two user objects.

The JSON approach:

```json
{
  "users": [
    {
      "id": 101,
      "name": "Ana García",
      "role": "admin",
      "active": true
    },
    {
      "id": 102,
      "name": "Beto Pérez",
      "role": "user",
      "active": false
    }
  ]
}
```

Approximate cost: ~55 tokens (the exact count depends on the tokenizer and on whitespace).

The TOON approach:

```
users[2]{id,name,role,active}:
  101,Ana García,admin,true
  102,Beto Pérez,user,false
```

Approximate cost: ~25 tokens.

Result: roughly a 54% reduction in token usage (from about 55 tokens to about 25) for the same information.
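The 54% figure is plain arithmetic on the two approximate counts: 1 - 25/55 ≈ 0.545. The snippet below reproduces that calculation and adds a tokenizer-free cross-check. It compares the character length of the pretty-printed JSON with the TOON text for the same records, written the way the TOON specification renders them (header `users[2]{...}:`, two-space indented rows, `true`/`false` literals). The helper name `percent_reduction` is hypothetical, and character savings are only a rough proxy for token savings.

```python
import json


def percent_reduction(before: float, after: float) -> float:
    """Percentage saved when going from `before` units down to `after` units."""
    return (1 - after / before) * 100


# The article's approximate token counts (exact values depend on the tokenizer).
print(f"token reduction: {percent_reduction(55, 25):.1f}%")  # token reduction: 54.5%

# Tokenizer-free cross-check: compare character lengths of the two encodings.
records = {"users": [
    {"id": 101, "name": "Ana García", "role": "admin", "active": True},
    {"id": 102, "name": "Beto Pérez", "role": "user", "active": False},
]}
as_json = json.dumps(records, indent=2, ensure_ascii=False)
as_toon = (
    "users[2]{id,name,role,active}:\n"
    "  101,Ana García,admin,true\n"
    "  102,Beto Pérez,user,false"
)
print(f"character reduction: {percent_reduction(len(as_json), len(as_toon)):.1f}%")
```
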

Impact of TOON vs JSON in LLMs on Tokens and Costs

In initial tests and technical reports, TOON reaches 74% accuracy (versus JSON’s 70%) while using approximately 40% fewer tokens in mixed-structure benchmarks across 4 models. For systems at scale, those percentages translate directly into substantial operational savings and higher throughput per inference instance.

The benefits are immediate and tangible:

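Lower latency: LLMs generate text token by token, so generation time grows with the number of output tokens. If eliminating JSON syntax cuts the output roughly in half, the generation phase takes roughly half as long. Fixed costs such as network time and prompt processing don’t shrink, so end-to-end latency improves by somewhat less. In voice or real-time chat applications, this can be the difference between a smooth experience and a frustrating one.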

Budget savings: If you pay per million tokens (input and output) and TOON shrinks your structured payloads by an average of 30-40%, the token cost of those payloads drops by the same proportion, simply by changing the serialization format. How much your total bill falls depends on what share of each request is structured data.

Expanded memory (context window): By freeing tokens from junk syntax, you have more space in the context window for what really matters: chat history, reference documents, and few-shot examples.
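The latency and budget effects can both be checked with back-of-the-envelope arithmetic. Every number in the sketch below is a hypothetical placeholder, not a measurement: 300 ms of fixed overhead, 20 ms per generated token, 1,000 tokens per request (600 of them structured data), a 35% TOON saving on that data, and $2.50 per million tokens. The calculation shows two things. Halving output tokens speeds up a response by less than 2x once fixed overhead is included. A payload reduction lowers the total bill in proportion to the payload's share of tokens.

```python
def response_time_ms(output_tokens: int, overhead_ms: float = 300.0,
                     ms_per_token: float = 20.0) -> float:
    """Rough end-to-end latency: fixed overhead plus sequential token generation."""
    return overhead_ms + output_tokens * ms_per_token


def monthly_cost(requests: int, tokens_per_request: int,
                 usd_per_million_tokens: float) -> float:
    """Token spend for a month of traffic at a flat per-token price."""
    return requests * tokens_per_request * usd_per_million_tokens / 1_000_000


# Latency: halving output tokens halves only the generation part.
json_ms = response_time_ms(200)  # hypothetical JSON-formatted answer
toon_ms = response_time_ms(100)  # same answer with half the output tokens
print(json_ms, toon_ms, f"speedup x{json_ms / toon_ms:.2f}")
# 4300.0 2300.0 speedup x1.87

# Cost: only the structured-data share of each request shrinks.
monthly_requests = 1_000_000
total_tokens = 1_000   # tokens per request (hypothetical)
data_tokens = 600      # of which structured data (hypothetical)
reduction = 0.35       # assumed TOON saving on the data portion

before = monthly_cost(monthly_requests, total_tokens, 2.50)
after_tokens = total_tokens - round(data_tokens * reduction)
after = monthly_cost(monthly_requests, after_tokens, 2.50)
print(f"${before:,.0f} -> ${after:,.0f} ({1 - after / before:.0%} saved)")
# $2,500 -> $1,975 (21% saved)
```

Plug in your own measured overhead, decode speed, and token mix. The structure of the calculation matters more than these particular figures.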

How to Get Started with TOON

Implementation is surprisingly straightforward, as modern LLMs are smart enough to understand the format with a simple system instruction.

Suggested System Prompt:

“From now on, don’t respond in JSON. Use TOON format to maximize token density: for arrays of objects, write a header of the form name[N]{field1,field2}: followed by one row per item, indented two spaces, with values separated by commas.”

Parsing libraries for TOON are available on GitHub for Node.js (the @toon-format/toon package) and Python, allowing you to transform LLM output back into usable objects in backend code.

How to Integrate It into a Pipeline (Practical Steps)

- Map your JSON schema to a TOON version: prioritize fields that repeat most (IDs, keys, large arrays)

- Use the official SDK to serialize/parse and validate against schemas before sending to the LLM; this prevents format errors in production

- A/B Benchmark: compare tokens per request, latency, and cost per 1,000 requests; also measure impact on response quality

- Gradual rollout: start with example prompts and detailed logs to detect semantic degradations
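The A/B step mostly comes down to aggregating logged requests per variant. The minimal sketch below assumes each log entry records a variant name, a token count, and a latency in milliseconds. The field names, sample values, and the $2.50-per-million price are hypothetical. Response-quality scores would need their own evaluation set and are left out here.

```python
from statistics import mean, median


def summarize(logs: list, usd_per_million_tokens: float) -> dict:
    """Per-variant mean tokens, median latency, and cost per 1,000 requests."""
    summary = {}
    for variant in sorted({entry["variant"] for entry in logs}):
        rows = [e for e in logs if e["variant"] == variant]
        avg_tokens = mean(e["tokens"] for e in rows)
        summary[variant] = {
            "mean_tokens": avg_tokens,
            "median_latency_ms": median(e["latency_ms"] for e in rows),
            "usd_per_1k_requests": avg_tokens * 1_000 * usd_per_million_tokens / 1_000_000,
        }
    return summary


# Hypothetical request logs from a JSON arm and a TOON arm.
logs = [
    {"variant": "json", "tokens": 1200, "latency_ms": 950},
    {"variant": "json", "tokens": 1000, "latency_ms": 870},
    {"variant": "toon", "tokens": 760, "latency_ms": 700},
    {"variant": "toon", "tokens": 700, "latency_ms": 690},
]
for variant, stats in summarize(logs, usd_per_million_tokens=2.50).items():
    print(variant, stats)
```
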

Example Implementation

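```javascript
import { encode, decode } from "@toon-format/toon";

const data = {
  users: [
    { id: 1, name: "Alice", role: "admin" },
    { id: 2, name: "Bob", role: "user" },
  ],
};

// Serialize to TOON before placing the data in a prompt.
const toonData = encode(data);
console.log(toonData);
// Output:
// users[2]{id,name,role}:
//   1,Alice,admin
//   2,Bob,user

// Parse TOON (for example, a model's reply) back into a plain object.
const roundTrip = decode(toonData);
console.log(roundTrip.users[1].name); // Bob
```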

```python
# Assumes a Python TOON port that exposes `encode` from a `toon` module;
# package and module names vary between implementations.
from toon import encode

data = {
    "users": [
        {"id": 1, "name": "Alice", "role": "admin"},
        {"id": 2, "name": "Bob", "role": "user"},
    ]
}

print(encode(data))
# Output:
# users[2]{id,name,role}:
#   1,Alice,admin
#   2,Bob,user
```

Risks and Limitations

Compatibility: Some parsers and tools expect JSON; you need a conversion layer in the backend. However, the official TOON implementations provide this out of the box.

Serialization errors: Syntactic compression can hide bugs; validate with schemas and unit tests.

Quality vs compression: In cases with deeply nested or non-uniform structures, JSON may be more efficient. TOON excels with uniform arrays of objects but isn’t always optimal for every data shape.

When NOT to use TOON:

- Deeply nested or irregular data structures

- Non-AI use cases where JSON tooling is essential

- Semi-uniform arrays (40-60% tabular eligibility) where token savings diminish
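A quick way to decide whether an array is a good TOON candidate is to check how many of its items share one flat shape. The heuristic below is an illustration only; the function names and the 0.8 threshold are hypothetical, not taken from the TOON specification. It reports the fraction of items that are dicts with only primitive values and whose key set matches the most common key set.

```python
from collections import Counter

# JSON-style primitive types (bool is covered by int, listed for clarity).
PRIMITIVES = (str, int, float, bool, type(None))


def tabular_share(items: list) -> float:
    """Fraction of items that are flat dicts sharing the most common key set."""
    if not items:
        return 0.0
    shapes = [frozenset(item) for item in items
              if isinstance(item, dict)
              and all(isinstance(v, PRIMITIVES) for v in item.values())]
    if not shapes:
        return 0.0
    _, count = Counter(shapes).most_common(1)[0]
    return count / len(items)


def good_toon_candidate(items: list, threshold: float = 0.8) -> bool:
    """Hypothetical rule of thumb: prefer TOON when most items fit one table."""
    return tabular_share(items) >= threshold


uniform = [{"id": 1, "name": "Alice"}, {"id": 2, "name": "Bob"}]
mixed = [{"id": 1, "name": "Alice"}, {"id": 2, "tags": ["x"]}, "note", {"id": 3}]
print(tabular_share(uniform), tabular_share(mixed))  # 1.0 0.25
```

Arrays that land in the middle band, or that contain nested values, are the cases where sticking with JSON is usually the safer choice.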

The Verdict

Continuing to use JSON for LLMs in 2025 is like trying to send a fax using an iPhone. It works, but you’re wasting all the potential of the technology.

TOON isn’t just a format; it’s a statement of principles about efficiency in AI. TOON’s sweet spot is uniform arrays of objects (multiple fields per row, same structure across items), achieving CSV-like compactness while adding explicit structure that helps LLMs parse and validate data reliably.

If you’re serious about your application’s speed and cost optimization, it’s time to let go of the curly braces {} and embrace density.

Are you ready to reclaim your tokens?