Distributed cache can improve the responsiveness of today’s high-performance web and mobile apps. At heart it’s a simple concept, but, when used well, it can bring improved capacity and flexibility to networked systems. As well as classic caching scenarios, distributed cache systems like Redis offer an alternative to standard databases when handling working data.

In this article we’ll look more closely at distributed cache and how it can be used. And we’ll share some insights from Insoore to help you optimise your own apps and services.

The ABC of distributed cache

Caching is a well-known way to preserve the stability and performance of web apps and websites. Frequently served resources are stored in pre-generated form to reduce database hits and load on internal networks. Whether served from the filesystem or from memory, such mechanisms can significantly improve the capacity and speed of data-intensive applications. Distributed caching is essentially an extension of this principle.

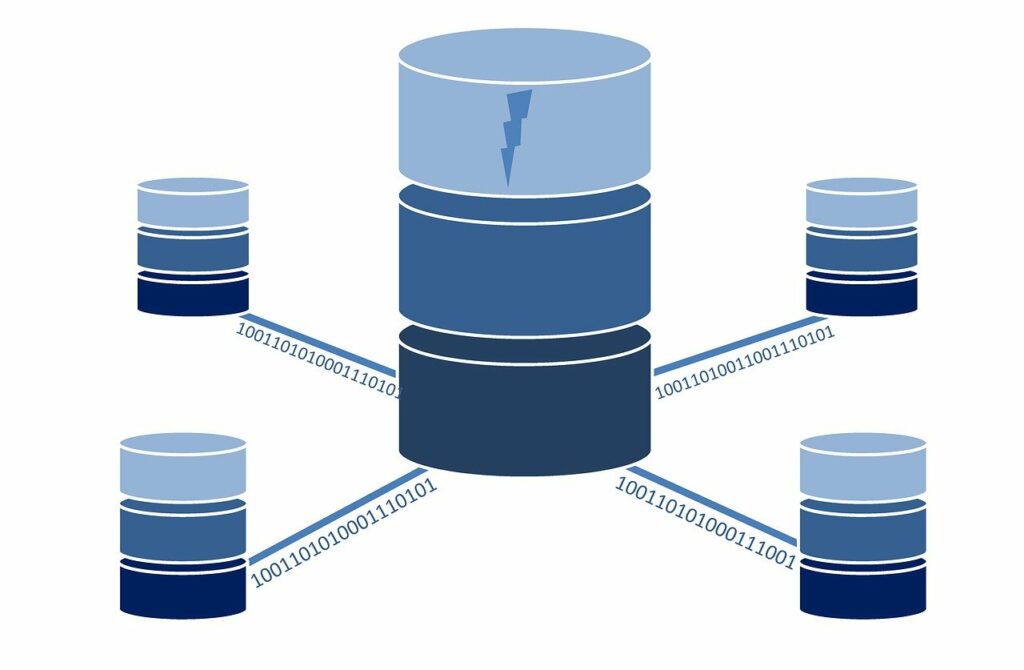

Where standard caching stores resources locally, a distributed cache pools the RAM of multiple connected devices. This overcomes the inherent limitations of single-machine-based caching strategies. Distributed cache is typically used for data-driven apps where performance and scale are significant factors.

Distributed cache vs. database

Without caching, data access typically requires a structured database of some form. Databases allow data to be stored securely and reliably, using the ACID principles to ensure data consistency. However, database reads and writes require disk operations, with associated network and hardware overheads. Distributed cache allows quick reads of commonly used data directly from memory, avoiding this I/O latency.

Distributed cache also achieves efficiency savings in other ways. It doesn’t require query parsing and syntax validation. Checks on database authorisation and permissions can be bypassed. What’s more, distributed cache data can be optimised by data access plans developed to meet predicted usage patterns. Caching also allows greater concurrency for read-only operations as locks or integrity checks are not needed.
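
The read path described above is often implemented as a cache-aside lookup: check the cache first and fall back to the database only on a miss. The following is a minimal in-process sketch of that idea, not a production design. CacheAside, loadFromDatabase and the Dictionary standing in for the distributed cache are hypothetical names chosen for illustration; a real deployment would replace the dictionary with calls to a store such as Redis.

```csharp
using System;
using System.Collections.Generic;

// Demo: the second read is served from the cache, so the loader runs only once.
var dbHits = 0;
var products = new CacheAside<int, string>(id =>
{
    dbHits++;                          // stands in for a slow database query
    return $"product-{id}";
});

Console.WriteLine(products.Get(42));   // miss: loads from the "database"
Console.WriteLine(products.Get(42));   // hit: served from memory
Console.WriteLine($"database hits: {dbHits}"); // database hits: 1

// Hypothetical helper: a dictionary stands in for the distributed cache.
public sealed class CacheAside<TKey, TValue> where TKey : notnull
{
    private readonly Dictionary<TKey, TValue> _cache = new();
    private readonly Func<TKey, TValue> _loadFromDatabase;

    public CacheAside(Func<TKey, TValue> loadFromDatabase) =>
        _loadFromDatabase = loadFromDatabase;

    public TValue Get(TKey key)
    {
        // 1. Try the cache first.
        if (_cache.TryGetValue(key, out var cached))
            return cached;

        // 2. On a miss, load from the source of truth and remember the result.
        var value = _loadFromDatabase(key);
        _cache[key] = value;
        return value;
    }

    // Call after a write so that the next read reloads fresh data.
    public bool Invalidate(TKey key) => _cache.Remove(key);
}
```

The Invalidate method hints at the main cost of this approach: whenever the underlying data changes, the cached copy has to be evicted or refreshed, otherwise readers keep seeing the old value.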

What is Redis?

Redis is an in-memory data store that can serve as the basis for distributed cache operations. It is well suited to applications where fast performance, scalability and network optimisation are key. Because its data operations run in memory, reads and writes are much faster than those of conventional disk-based databases, and when deployed in a distributed architecture it can do without disk-based persistence for real-time functionality. However, that doesn’t mean your data is precarious. Redis features a number of persistence options, including RDB (Redis Database) snapshots, which are taken periodically and can be restored after a system failure.

Redis is also highly versatile and is used for functionality such as streaming and message brokering. It offers native data types, so data can be stored without conversion: strings, hashes, lists, sets, streams and much more are all supported. Redis supports server-side scripting in Lua, making it highly programmable and flexible. It is also modular and extensible: you can write your own modules in C, or in C++ or any other language that supports C bindings.

Applying distributed cache: use cases and examples

A few example use cases give a window into just how powerful Redis can be:

- Application acceleration. By maintaining data in memory, Redis reduces the need for disk-based operations. This significantly lowers the latency bottleneck caused by I/O operations.

- Decreasing network usage. Cached data can be stored in cloud locations close to the requesting clients. Round trips to the primary database are greatly reduced, lowering network load and service providers’ bandwidth charges.

- Web session data. Redis can store large amounts of concurrent web session data, again without recourse to databases. This improves performance and makes load balancing more straightforward.

- Scaling. Data-intensive applications don’t always scale easily. However, Redis makes scaling easier by pooling resources across multiple servers.

Real-world use case scenarios

To get some deeper insights, let’s dig down into the specifics of some use cases from Insoore.

Time-limited process

The engineers at Insoore use Redis to manage the temporal validity of user operations. For example, a user may be required to perform a particular operation within 24 hours of a request. To trigger this, a link is generated and sent via email or SMS:

```csharp
var link = $"{_externalCaiPageConfiguration.Url}?id={resultSinistro.Entity.Id}";
```

This data is stored in the Redis cache with a limited validity period, passed here as linkTimeValidity:

```csharp
await _outputCacheStore.SetAsync(key, cd.ToByteArray(), null, linkTimeValidity, cancellationToken);
```

When the user responds with the requested information, the Redis cache is checked for the given key:

```csharp
var cached = await _outputCacheStore.GetAsync($"IXB_{request.Key}", cancellationToken);
ArgumentNullException.ThrowIfNull(cached);
```

If the key has expired, GetAsync returns null, ThrowIfNull throws, and the operation is blocked.
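
The flow above relies on the store forgetting a key once its validity period has elapsed. As a rough, self-contained illustration of that behaviour (not Insoore’s code, and with no Redis involved), the sketch below uses a hypothetical ExpiringStore with an injectable clock, so that expiry can be shown without waiting 24 hours. The key name and dates are made up for the example.

```csharp
using System;
using System.Collections.Generic;

// Demo with a controllable clock instead of the real time.
var now = new DateTimeOffset(2024, 1, 1, 9, 0, 0, TimeSpan.Zero);
var store = new ExpiringStore(() => now);

store.Set("IXB_link-1", new byte[] { 1, 2, 3 }, TimeSpan.FromHours(24));

now = now.AddHours(23);
Console.WriteLine(store.Get("IXB_link-1") is not null); // True: link still valid

now = now.AddHours(2);
Console.WriteLine(store.Get("IXB_link-1") is not null); // False: expired, block the operation

// Hypothetical in-memory stand-in for a cache with per-key validity.
public sealed class ExpiringStore
{
    private readonly Dictionary<string, (byte[] Value, DateTimeOffset ExpiresAt)> _entries = new();
    private readonly Func<DateTimeOffset> _clock;

    public ExpiringStore(Func<DateTimeOffset> clock) => _clock = clock;

    public void Set(string key, byte[] value, TimeSpan validFor) =>
        _entries[key] = (value, _clock() + validFor);

    public byte[]? Get(string key)
    {
        if (!_entries.TryGetValue(key, out var entry))
            return null;                 // never stored

        if (_clock() >= entry.ExpiresAt)
        {
            _entries.Remove(key);        // validity elapsed: forget the entry
            return null;
        }

        return entry.Value;
    }
}
```

With Redis the expiry bookkeeping happens on the server, so the application only has to handle the null result.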

API OutputCache

Insoore uses output caching to streamline API calls. ASP.NET Core in .NET 7 introduced output caching middleware, which by default stores responses in a MemoryOutputCacheStore. To activate it, the services are registered with services.AddOutputCache(); and the middleware is then enabled with app.UseOutputCache();

From then on, .CacheOutput() can be specified on the API Get methods:

```csharp
app.MapGet($"{Name}/{{id}}",
    [Authorize(Roles = Rule)]
    [SwaggerOperation(Summary = $"Get the entity by the id")]
    async ([FromRoute] int id, ISender sender, HttpContext context)
        => await sender.Send(new GetRequest<TInterface> { Id = id, RequesterId = context.GetId() })
).WithTags(Name)
 .CacheOutput(x => x.Tag($"{Name}Get"));
```

To invalidate the cache, they use await cache.EvictByTagAsync(cacheKey, cToken):

```csharp
app.MapDelete($"{Name}/{{id}}",
    [Authorize(Roles = Rule)]
    [SwaggerOperation(Summary = $"Delete", Description = "Allow to delete the entity")]
    async (int id, IOutputCacheStore cache, CancellationToken cToken, ISender sender, HttpContext context) =>
    {
        // cacheKey is not defined in this excerpt; presumably it holds the tag passed to CacheOutput.
        await cache.EvictByTagAsync(cacheKey, cToken);
        return await sender.Send(new DeleteRequest<TInterface> { Id = id, RequesterId = context.GetId() });
    }).WithTags(Name);
```

However, while this works, there is a problem. To store its entries, MemoryOutputCacheStore uses a MemoryCache, which is “basically a dictionary” held in the memory of a single process. This can lead to problems when scaling. For example, consider two instances of the same API, A and B. Instance A caches an entity. Instance B then invalidates the cached entity, updates it and caches it again. Because the eviction only touched B’s own memory, A and B now hold different versions of the cached entity. To solve this, Insoore used Redis to create a custom IOutputCacheStore that keeps cache updates in sync across instances with just a few lines of code:

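```csharp
using Microsoft.AspNetCore.OutputCaching;
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.DependencyInjection.Extensions;
using StackExchange.Redis; // assumed source of IConnectionMultiplexer

public class RedisOutputCacheStore : IOutputCacheStore
{
    private readonly IConnectionMultiplexer _connectionMultiplexer;

    public RedisOutputCacheStore(IConnectionMultiplexer connectionMultiplexer)
    {
        _connectionMultiplexer = connectionMultiplexer;
    }

    // EvictByTagAsync, GetAsync and SetAsync are implemented here.
}

// Registration helper: extension methods must be declared in a non-nested static class.
public static class RedisOutputCacheServiceCollectionExtensions
{
    public static IServiceCollection AddRedisOutputCache(this IServiceCollection services)
    {
        services.AddOutputCache();
        // Swap the default in-memory store for the Redis-backed one.
        services.RemoveAll<IOutputCacheStore>();
        services.AddSingleton<IOutputCacheStore, RedisOutputCacheStore>();
        return services;
    }
}
```

The interface methods are then implemented against the Redis cache: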

```csharp
public ValueTask EvictByTagAsync(string tag, CancellationToken cancellationToken)
public ValueTask<byte[]?> GetAsync(string key, CancellationToken cancellationToken)
public ValueTask SetAsync(string key, byte[] value, string[]? tags, TimeSpan validFor, CancellationToken cancellationToken)
```
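
The article does not show the bodies of these three methods. Purely as an illustration of how they could be filled in, the sketch below assumes the StackExchange.Redis client and a reachable Redis server. The class name SketchRedisOutputCacheStore and the "tag:" key naming scheme are hypothetical, and this should not be read as Insoore’s implementation. Each entry is stored as a Redis string with a time-to-live, and each tag is a Redis set listing the keys that carry it.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.AspNetCore.OutputCaching;
using StackExchange.Redis;

// Sketch only: assumes StackExchange.Redis and a reachable Redis server.
public sealed class SketchRedisOutputCacheStore : IOutputCacheStore
{
    private readonly IDatabase _db;

    public SketchRedisOutputCacheStore(IConnectionMultiplexer connectionMultiplexer) =>
        _db = connectionMultiplexer.GetDatabase();

    // Hypothetical naming scheme: one Redis set per tag lists the keys carrying it.
    public static string TagKey(string tag) => $"tag:{tag}";

    // The StackExchange.Redis calls used here do not accept a CancellationToken,
    // so the token parameters below are unused in this sketch.
    public async ValueTask<byte[]?> GetAsync(string key, CancellationToken cancellationToken)
    {
        // A missing or expired key comes back as a null value.
        byte[]? bytes = await _db.StringGetAsync(key);
        return bytes;
    }

    public async ValueTask SetAsync(string key, byte[] value, string[]? tags,
        TimeSpan validFor, CancellationToken cancellationToken)
    {
        // Store the entry with a time-to-live so Redis expires it automatically.
        await _db.StringSetAsync(key, value, validFor);

        // Record the key under each tag so it can be found at eviction time.
        foreach (var tag in tags ?? Array.Empty<string>())
            await _db.SetAddAsync(TagKey(tag), key);
    }

    public async ValueTask EvictByTagAsync(string tag, CancellationToken cancellationToken)
    {
        // Delete every entry recorded under the tag, then the tag set itself.
        var members = await _db.SetMembersAsync(TagKey(tag));
        foreach (var member in members)
        {
            RedisKey entryKey = (string?)member;
            await _db.KeyDeleteAsync(entryKey);
        }
        await _db.KeyDeleteAsync(TagKey(tag));
    }
}
```

Because every API instance talks to the same Redis server, an eviction issued by one instance is immediately visible to the others. The sketch leaves out details a production store would need to consider, such as giving the tag sets their own expiry and making the multi-step operations atomic.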

API Auth Policies

Sometimes it’s necessary to control access to API methods. Access rules are stored in a database, but if they had to be queried to validate each API call, the benefits of caching would be lost. To avoid this, Insoore stores these rules in a Redis cache:

```csharp
var ruleSet = await _context.BloccoAccesso
    .Where(x => x.UtenteId == utente.Id)
    .Select(x => x.MapTo<BloccoAccessoDto, BloccoAccessoDao>(x))
    .ToListAsync();

var key = $"IXB_Auth_{utente.Id}";
await _cache.SetAsync(key, ruleSet.ToByteArray(), null, TimeSpan.FromMinutes(TimeValidity), cancellationToken);
```

They have also created custom middleware to process the authorisation request, registered with app.UseMiddleware<UtenzeAuthorizationMiddleware>(). This middleware retrieves the rules relating to the current user from Redis, and if any blocking rule matches the requested API method, the request is denied:

```csharp
var key = $"IXB_Auth_{userId}";
var path = httpContextAccessor.HttpContext?.Request?.Path.Value;
var method = httpContextAccessor.HttpContext?.Request?.Method;

var cachedRules = await cache.GetAsync(key, CancellationToken.None);
var rules = cachedRules?.Deserialize<List<BloccoAccessoDto>>();

// Deny the request if any cached rule blocks this method and path.
if (rules?.Any(x => x.Match(method, path)) ?? false)
{
    await new UnauthorizedAccessException($"User:{userId} Method:{method} Path:{path}").ManageHttpException(_logger, context);
    return;
}
```
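
The excerpt above calls x.Match(method, path) without showing how a rule decides whether it applies. As one possible reading, and only as an illustration, the sketch below uses a hypothetical BlockRule record that matches on HTTP method (with "*" meaning any method) and on a path prefix. The record, its properties and the sample routes are all assumptions and are not taken from Insoore’s code.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

var rules = new List<BlockRule>
{
    new("DELETE", "/claims"),   // block deletes on paths starting with /claims
    new("*", "/admin"),         // block every method on paths starting with /admin
};

Console.WriteLine(rules.Any(r => r.Match("DELETE", "/claims/42"))); // True
Console.WriteLine(rules.Any(r => r.Match("GET", "/claims/42")));    // False
Console.WriteLine(rules.Any(r => r.Match("GET", "/admin/users")));  // True

// Hypothetical rule shape: an HTTP method (or "*") plus a path prefix.
public sealed record BlockRule(string Method, string PathPrefix)
{
    public bool Match(string? method, string? path)
    {
        // Without a method or path there is nothing to match against.
        if (method is null || path is null)
            return false;

        var methodMatches = Method == "*" ||
            string.Equals(Method, method, StringComparison.OrdinalIgnoreCase);

        // Simple prefix test; a stricter matcher would respect path segment boundaries.
        return methodMatches &&
            path.StartsWith(PathPrefix, StringComparison.OrdinalIgnoreCase);
    }
}
```

Keeping the rule check as a pure function of method and path means the middleware only needs one cache read per request, followed by an in-memory scan of the user’s rules.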

Best practices and tips for distributed cache

To finish, let’s take a look at some best practices for Redis and distributed cache management.

- Leverage indexes. The key-value paradigm is central to Redis, but it’s important to use indexing and sorting strategies that match your data types to get the best performance. For example, you may choose sorted sets as numeric indexes, lexicographically encoded indexes, geospatial indexes, IP range indexes or full-text indexes.

- Manage data storage. Not all data is simple to put into and pull out of Redis structures. In such cases, you can serialise your data as JSON and store it as a single value, but this can be inefficient, as each change means reading and rewriting the whole value. A faster alternative is to use RedisJSON to manipulate complex data types.

- Store images and large files in memory. While you may be tempted to reference images by file paths, this is inefficient and doesn’t make the best of distributed cache’s potential. Redis strings are binary-safe, so you can store binary formats directly without conversion, which will be much more efficient.

- Use the right modelling for time series data. Depending on your application, you can use different access patterns to order data temporally. Choose sorted sets, lexicographic sorted sets or bitfields as appropriate.