Dr. Ric. Luca Pedrelli received his master’s degree in computer science from the University of Pisa in 2015 and his doctorate in computer science from the same university in 2019. He is currently a research fellow with an interest in deep neural networks, based at the Computer Science Department of the University of Pisa within the Computational Intelligence & Machine Learning (CIML) research group. He also teaches deep learning at the University of Pisa and in post-graduate specialization courses.

Luca’s research areas include:

- The analysis and development of deep neural networks in machine learning for time-series and sequence modelling

- A novel class of recurrent neural networks (RNNs)

- Developing analysis tools for RNNs

- Deep neural networks and robotics

Deep learning models are typically characterized by layers of neurons. Each layer provides a more abstract representation of the input information. This allows the model to solve a global task by addressing a progression of increasingly abstract problems. In the case of time-series processing, deep recurrent architectures are able to develop a multiple time-scales representation.

Luca’s talk presents Deep Echo State Networks as a tool to analyze and design efficient deep recurrent architectures for real-world tasks concerning time-series and sequence modelling. It is a deep dive with plenty of equations, theory and analysis. This article outlines only some of the areas covered; the accompanying video provides a more complete picture.

Sequence and Time-series Tasks

- In sequence processing tasks, a sequence is an ordered collection of elements with sequential dependencies

- Time series are characterized by multiple time-scales dynamics

- Signals are characterized by different frequency components
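As a small illustration of the last two points, the sketch below builds a toy signal from a slow and a fast sinusoid and recovers the two components from its spectrum. The sampling rate, frequencies and amplitudes are assumptions chosen for the example; they do not come from the talk.

```python
import numpy as np

# Hypothetical signal: a slow 2 Hz component plus a weaker, fast 40 Hz component.
fs = 1000                  # sampling rate in Hz (assumed)
t = np.arange(fs) / fs     # one second of samples
signal = np.sin(2 * np.pi * 2 * t) + 0.5 * np.sin(2 * np.pi * 40 * t)

# Magnitude spectrum of the real-valued signal and the matching frequency axis.
spectrum = np.abs(np.fft.rfft(signal))
freqs = np.fft.rfftfreq(len(signal), d=1 / fs)

# Frequencies of the two largest spectral peaks, in ascending order.
top_two = np.sort(freqs[np.argsort(spectrum)[-2:]])
print(top_two)
```

The slow component describes the long-term trend of the signal and the fast one its short-term variation, which is the sense in which a single time series can carry several time scales at once.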

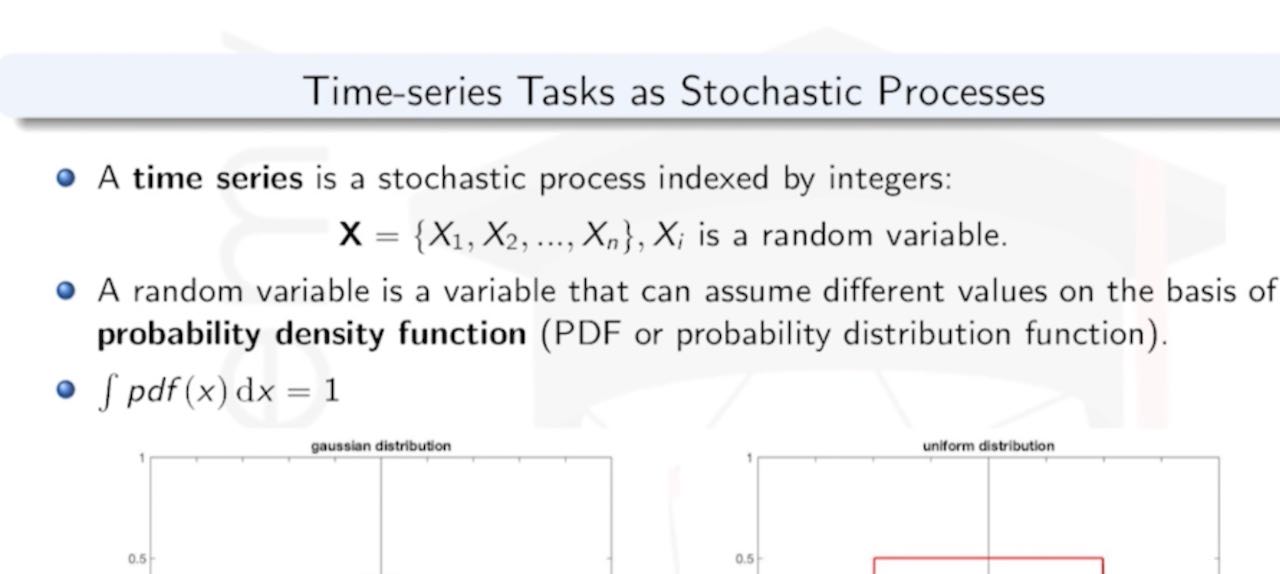

Time series tasks as stochastic processes

A common approach in the analysis of time series data is to consider the observed time series as part of a realization of a stochastic process. A real stochastic process is a family of real random variables 𝑿={xᵢ(ω); i∈T}, all defined on the same probability space (Ω, F, P). The set T is called the index set of the process. If T⊂ℤ, then the process is called a discrete stochastic process. If T is an interval of ℝ, then the process is called a continuous stochastic process.
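To make the definition concrete, the sketch below samples two realizations of a discrete stochastic process. A first-order autoregressive process is used purely as an assumed example, and the function name `ar1_realization` and its parameters are hypothetical. The index set is T = {0, ..., n_steps - 1} ⊂ ℤ, and each random seed plays the role of one outcome ω.

```python
import numpy as np

def ar1_realization(n_steps, phi=0.9, sigma=1.0, seed=0):
    """Sample one realization of x_t = phi * x_{t-1} + noise_t (assumed toy process)."""
    rng = np.random.default_rng(seed)
    x = np.zeros(n_steps)
    for t in range(1, n_steps):
        x[t] = phi * x[t - 1] + sigma * rng.standard_normal()
    return x

# Two different outcomes give two different observed time series
# of the same underlying process.
path_a = ar1_realization(200, seed=0)
path_b = ar1_realization(200, seed=1)
```

An observed time series corresponds to a single such path; the modelling task is to learn about the process from that one realization.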

Deep neural networks vs Shallow neural networks

A deep neural network is a neural network with an input layer, an output layer and, in the usual sense of "deep", more than one hidden layer in between. Each layer transforms the representation produced by the previous one, building what some refer to as a “feature hierarchy.” One of the key uses of these networks is dealing with unlabeled or unstructured data.

A shallow neural network typically consists of a single hidden layer, whereas a deep neural network stacks several hidden layers.

While deep models are characterized by a stack of non-linear transformations represented by a hierarchy of multiple hidden layers, shallow models are typically composed of a single hidden layer. Both kinds of models are universal approximators of continuous functions. However, deep models have better abilities in providing a distributed, hierarchical and compositional representation of the input information.
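The structural difference can be sketched in a few lines. The example below is a minimal illustration with random, untrained weights; the layer sizes, the tanh non-linearity and the helper name `forward` are assumptions made for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

def forward(x, weights):
    """Apply a stack of tanh layers, one weight matrix per hidden layer."""
    h = x
    for W in weights:
        h = np.tanh(W @ h)
    return h

x = rng.standard_normal(4)                    # toy input with 4 features

# Shallow model: a single non-linear transformation of the input.
shallow = [rng.standard_normal((16, 4))]

# Deep model: each layer transforms the representation built by the previous one.
deep = [rng.standard_normal((8, 4)),
        rng.standard_normal((8, 8)),
        rng.standard_normal((8, 8))]

h_shallow = forward(x, shallow)
h_deep = forward(x, deep)
```

In the deep case the final representation is a composition of three non-linear maps, which is what gives the model its hierarchical character.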

Why deep neural networks?

- Deep neural networks obtain state-of-the-art results in real-world applications such as computer vision and natural language processing, and in mastering complex games such as Chess and Go

- They typically obtain better performance compared to shallow models

- Universal approximation: they can approximate continuous functions, often more effectively than a shallow model

- Suitable for GPU optimization

Open issues in Deep Learning

- What makes deep learning deep is not formally defined: what does "deep" mean mathematically?

- How is information represented?

- Why do deep learning models achieve good performance?

- How many layers?

- How many neurons?

- Why should we use deep neural networks for time series?

- How does a deep neural network develop temporal features inside its layers?

Recurrent neural networks

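Recurrent neural networks, also known as RNNs, are a class of neural networks whose hidden state at each time step depends on the current input and on the state from the previous step, so that information from earlier inputs is carried forward through the sequence.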
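A minimal sketch of this recurrence is shown below, assuming a plain tanh RNN with random, untrained weights. The sizes, the weight scaling and the name `run_rnn` are hypothetical choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n_in, n_hidden = 3, 5

# Random weights for illustration only; a real RNN would learn these.
W_in = rng.standard_normal((n_hidden, n_in)) * 0.5
W_rec = rng.standard_normal((n_hidden, n_hidden)) * 0.5

def run_rnn(inputs):
    """Return the hidden state after each step of the input sequence."""
    h = np.zeros(n_hidden)
    states = []
    for x_t in inputs:
        # The new state mixes the current input with the previous state.
        h = np.tanh(W_in @ x_t + W_rec @ h)
        states.append(h)
    return np.array(states)

sequence = rng.standard_normal((10, n_in))
states = run_rnn(sequence)
```

Because the previous state feeds into the update, the final state depends on the whole sequence and not only on the last input.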

Reservoir computing and Echo state networks

Reservoir computing (RC) is a novel approach to time series prediction using recurrent neural networks. In RC, an input signal perturbs the intrinsic dynamics of a medium called a reservoir. A readout layer is then trained to reconstruct a target output from the reservoir’s state. The multitude of RC architectures and evaluation metrics poses a challenge to both practitioners and theorists who study the task-solving performance and computational power of RC. In addition, in contrast to traditional computation models, the reservoir is a dynamical system in which computation and memory are inseparable, and therefore hard to analyze.

Echo state networks (ESNs) provide an architecture and supervised learning principle for RNNs. The main ideas are: 1) to drive a large, random, fixed recurrent neural network with the input signal, thereby inducing a nonlinear response signal in each neuron of this “reservoir” network; 2) to obtain the desired output signal as a trainable linear combination of all of these response signals.
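The two ideas can be sketched as follows. This is a minimal illustration under stated assumptions, not the implementation used in the talk: the reservoir size, the spectral radius of 0.9, the washout length, the ridge regularization and the sine-wave task are all choices made for the example.

```python
import numpy as np

rng = np.random.default_rng(42)
n_res = 100

# 1) Fixed random reservoir: these weights are never trained.
W_in = rng.uniform(-0.5, 0.5, size=n_res)
W = rng.uniform(-0.5, 0.5, size=(n_res, n_res))
W *= 0.9 / np.max(np.abs(np.linalg.eigvals(W)))   # rescale spectral radius to 0.9 (assumed)

def reservoir_states(u):
    """Drive the reservoir with a scalar input sequence and collect its states."""
    x = np.zeros(n_res)
    states = np.empty((len(u), n_res))
    for t, u_t in enumerate(u):
        x = np.tanh(W_in * u_t + W @ x)
        states[t] = x
    return states

# Toy task: predict the next sample of a sine wave.
u = np.sin(0.2 * np.arange(601))
X, y = reservoir_states(u[:-1]), u[1:]
washout = 100                                     # discard the initial transient
X, y = X[washout:], y[washout:]

# 2) Trainable linear readout, fitted here with ridge regression.
ridge = 1e-6
W_out = np.linalg.solve(X.T @ X + ridge * np.eye(n_res), X.T @ y)
mse = np.mean((X @ W_out - y) ** 2)
```

Only `W_out` is learned, and fitting it is a linear least-squares problem, which is why training an ESN is cheap compared with training a fully trained RNN by gradient descent.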

Deep Echo State Networks

The introduction of Deep Echo State Networks (DeepESNs) makes it possible to analyze and understand the intrinsic properties of DeepRNNs, providing at the same time design solutions for efficient deep recurrent architectures.

DeepESNs foster the study and analysis of the intrinsic architectural properties of DeepRNNs. In particular, the hierarchical organization of recurrent layers enhances the memory capacity, the entropy (information quantity) and the multiple time-scales diversification. The use of a linear activation in L-DeepESNs makes it easier to identify the features represented by DeepRNNs and to define an algebraic expression that characterizes the effect of deep recurrent architectures on the dynamics of the state. DeepESNs also provide efficient solutions to design DeepRNNs suitable for applications with temporal sequences characterized by multiple time-scales dynamics.
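A hierarchy of recurrent layers can be sketched as a stack of reservoirs. The example assumes the commonly described layout in which the first reservoir is driven by the external input, each further reservoir is driven by the state of the layer below, and the states of all layers are concatenated for the readout. The helper names, layer sizes and scaling values are hypothetical, and details such as leaky integration are left out.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_layer(n_in, n_res, rho=0.9):
    """Create fixed random input and recurrent weights for one reservoir layer."""
    W_in = rng.uniform(-0.5, 0.5, size=(n_res, n_in))
    W = rng.uniform(-0.5, 0.5, size=(n_res, n_res))
    W *= rho / np.max(np.abs(np.linalg.eigvals(W)))   # set spectral radius to rho
    return W_in, W

def deep_esn_states(u, layers):
    """u has shape (T, n_in); returns the concatenated layer states, shape (T, total units)."""
    xs = [np.zeros(W.shape[0]) for _, W in layers]
    collected = []
    for u_t in u:
        layer_input = u_t
        for i, (W_in, W) in enumerate(layers):
            xs[i] = np.tanh(W_in @ layer_input + W @ xs[i])
            layer_input = xs[i]          # the next layer is driven by this layer's state
        collected.append(np.concatenate(xs))
    return np.array(collected)

n_in, n_res, n_layers = 1, 20, 3
layers = [make_layer(n_in if i == 0 else n_res, n_res) for i in range(n_layers)]
u = np.sin(0.1 * np.arange(200)).reshape(-1, 1)
states = deep_esn_states(u, layers)
```

As in a single-layer ESN, the recurrent weights stay fixed and only a linear readout on `states` would be trained, so the per-layer blocks of `states` can be inspected separately to study how each level of the hierarchy responds to the input.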

Luca was also available to answer attendee questions. Be sure to take a look at the accompanying video to find out more.