Over the last few months, I have shown you how Machine Learning at the edge is improving our lives. I also shared some practical examples to give you a chance to try it for yourselves. In this article, I look at the current limitations of edge ML and explore where the technology will go in the future.

The limitations of current edge devices

In the first article of the series, I explained why moving machine learning to the network edge makes sense, and how edge ML overcomes many of the practical limitations of machine learning.

For instance, it allows ML models to run without network connectivity, or where security issues limit network access. It also allows Machine Learning to be embedded into end-devices, such as headphones or car stereos. However, all these applications rely on the use of embedded MCUs or other low-power processors. This, in turn, imposes a real limit on the capabilities of edge ML.

Processing power of MCUs

Machine Learning at the Edge requires devices that draw only small amounts of power. Moreover, the devices mustn’t overheat and can only be passively cooled. Just imagine wearing headphones that get uncomfortably hot, or that need a fan!

The problem is, there is a direct correlation between processing power, power drawn, and heat production.

Over the decades, chip manufacturers have tried various ways to improve this trade-off. These include shrinking chip dies, splitting processing between different cores, and using co-processors. But the current generation of MCUs is still lagging behind.

32-bit limitations

One of the key ways to increase processing power is moving to 64-bit (or higher) architectures. These instantly bring increases in performance because the chip can do more processing in every clock cycle.

They also allow larger data structures to be stored in the cache, which speeds up many processing tasks. However, 64-bit processors are much more power-hungry than 16- or 32-bit ones. As a result, the majority of high-performance MCUs are 32-bit.

So, what are the practical implications of this? Firstly, it means data operations are slower, because data has to be moved to and from memory more often. Secondly, it limits the complexity of data structures you can create. As we will see, this causes problems for deep learning and other advanced forms of ML. Finally, it imposes an absolute limit on the addressable memory space. That, in turn, limits the size of the models and data sets the device can handle.
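To make the addressing limit concrete, here is a minimal sketch of the arithmetic. The function name `max_addressable_bytes` is my own, and it assumes a flat, byte-addressable memory space.

```python
def max_addressable_bytes(address_bits: int) -> int:
    """Upper bound on directly addressable memory, assuming one byte per address."""
    return 2 ** address_bits


GIB = 2 ** 30  # bytes in one gibibyte

print(max_addressable_bytes(16))        # 65536 bytes
print(max_addressable_bytes(32) / GIB)  # 4.0 (GiB)
```

This is only the theoretical ceiling. A typical MCU ships with far less physical memory than its address width would allow.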

How this impacts machine learning at the edge

Machine Learning is a rapidly-evolving field. But creating ML models relies on high-power processors and specialised servers. This means the most advanced forms of ML are simply not possible at the network edge. Let’s look at some of the things that are not possible with the current generation of MCUs.

Unsupervised learning at the edge

The majority of standard Machine Learning models rely on supervised learning. That is, you take a large set of labelled data and use this to teach a model to identify useful patterns. The problem is, often there is little or no existing labelled data.

In such cases, data scientists turn to unsupervised learning. This seeks to identify interesting patterns in the data, rather than match patterns with labels.

This approach can be used in two ways. Firstly, you may simply want to identify important patterns in the data. These can help with tasks like classification or identifying anomalies.

Secondly, it allows you to identify important features in the data. You can then create a small labelled data set and combine this with supervised learning to generate a more complex model.
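As a rough illustration of finding patterns without labels, the sketch below groups unlabelled one-dimensional readings using plain k-means clustering. The function name `kmeans_1d` and the sample readings are hypothetical, and this is only one of many unsupervised techniques.

```python
def kmeans_1d(values, k, iterations=10):
    """Group unlabelled 1-D readings into k clusters (plain k-means)."""
    ordered = sorted(values)
    # Deterministic start: spread the initial centres evenly across the sorted data.
    centres = [ordered[i * (len(ordered) - 1) // max(k - 1, 1)] for i in range(k)]
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for v in values:
            # Assign each reading to its nearest centre.
            nearest = min(range(k), key=lambda i: abs(v - centres[i]))
            clusters[nearest].append(v)
        # Move each centre to the mean of its members; keep it if the cluster is empty.
        centres = [sum(c) / len(c) if c else centres[i] for i, c in enumerate(clusters)]
    return centres


# Hypothetical sensor readings with two obvious groups and no labels.
readings = [20.1, 19.8, 20.3, 35.2, 34.9, 20.0, 35.5]
print(kmeans_1d(readings, k=2))
```

With these readings, the two centres should settle near 20 and 35, which is the kind of structure you could then label by hand or use to flag anomalies.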

Unsupervised learning is hard to do on current edge devices because they lack the raw processing power. As a result, Machine Learning at the edge is limited to tasks where there is an existing supervised learning model.

Continuous learning

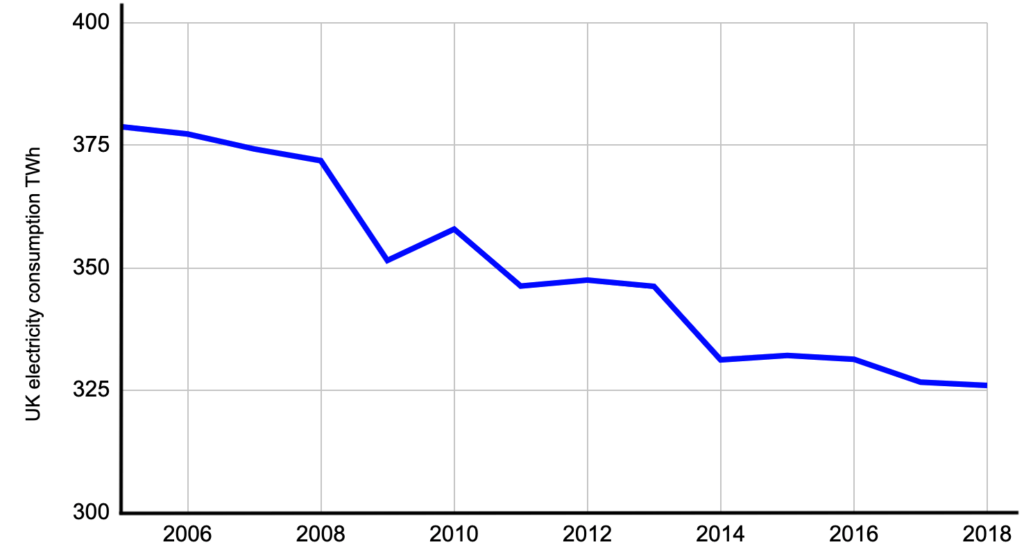

A supervised ML model reflects the data available at a fixed point in time. Imagine a model that predicts future electricity requirements based on historic demand and the current weather conditions. The model is probably quite accurate when it is first trained. But now fast-forward five years. New appliances are more efficient than old ones, leading to a reduction in power consumed.

Houses are better-insulated, meaning there is less need for heating in winter and cooling in summer. Also, people are ever more aware of energy conservation and seek to reduce their electricity use. As a result, your demand model is no longer accurate.

There are two ways to cope with this problem. The first is to simply replace the model periodically with one trained using the latest data. This works quite well, but only where the changes are gradual and where you can actually update the model.

The second is to use continuous learning. This is a relatively new field in Machine Learning that allows models to continually adjust themselves in response to the latest data. As a result, the model never becomes stale and out of date.
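One simple way to picture this is a model that takes a small gradient step every time a new observation arrives. The sketch below is my own minimal example, not a production technique: the class `OnlineLinearModel`, the learning rate, and the synthetic data stream are all assumptions made for illustration.

```python
class OnlineLinearModel:
    """One-feature linear model updated one sample at a time with SGD."""

    def __init__(self, learning_rate=0.1):
        self.weight = 0.0
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict(self, x):
        return self.weight * x + self.bias

    def update(self, x, y):
        # Squared-error gradient step using only the newest observation.
        error = self.predict(x) - y
        self.weight -= self.learning_rate * error * x
        self.bias -= self.learning_rate * error


model = OnlineLinearModel()
# Hypothetical stream: the target first follows y = 2x, then drifts to y = 1.5x.
for slope in (2.0, 1.5):
    for step in range(5000):
        x = (step % 10) / 10.0
        model.update(x, slope * x)

print(round(model.weight, 2), round(model.bias, 2))
```

After the drift, the weight should end up close to 1.5 without anyone retraining and redeploying the model. The cost is that every device must now do training work as well as inference.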

Deep learning at the edge

Deep Learning is one of the most powerful Machine Learning techniques. It uses deep neural networks to create extremely complex ML models. Some deep learning systems exhibit self-learning.

DeepMind’s AlphaGo, for instance, was able to learn to play the Chinese game of Go. Over a number of months, it iterated a huge number of models that played against each other. It used this to learn new moves while discarding less successful models. In the end, it was able to beat a human world champion Go player.

Deep Learning is only possible with extremely powerful hardware that is capable of a large number of parallel operations. Typically, it requires dedicated clusters of high-performance servers, such as Microsoft Azure’s NC-series virtual machines. It is certainly beyond the capabilities of low-cost, low-power edge devices.

Advances that will boost Machine Learning at the edge

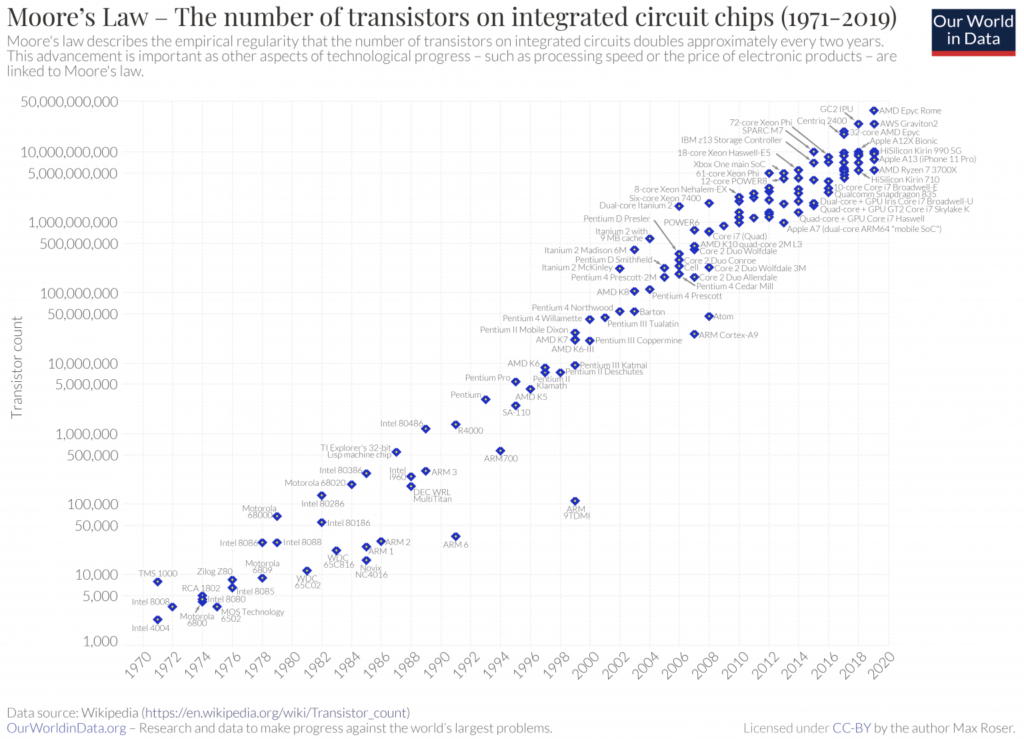

Fortunately, MCU architectures are advancing as fast as CPUs, which means they broadly follow Moore’s Law. This law is named after Gordon Moore, one of the cofounders of Intel. Moore predicted that the number of transistors on a chip would double roughly every two years. This is often quoted as a doubling in computational power every 18 months.

The image above shows how this law has held true over 50 years of CPU developments. If MCUs advance at the same rate, we will see rapid increases in capabilities over the coming years to the advantage of Machine Learning on the Edge.
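The doubling rule is easy to turn into a back-of-the-envelope projection. In the sketch below, the function name `projected_transistors` and the starting count of one million are illustrative assumptions, not figures for any real chip.

```python
def projected_transistors(initial_count, years, doubling_period_years=2.0):
    """Project a transistor count forward, assuming a fixed doubling period."""
    return initial_count * 2 ** (years / doubling_period_years)


# Ten years at one doubling every two years is five doublings.
print(projected_transistors(1_000_000, 10))  # 32000000.0

# The same decade with an 18-month doubling period grows roughly 100-fold.
print(projected_transistors(1_000_000, 10, doubling_period_years=1.5) / 1_000_000)
```

The gap between the two results shows how sensitive long-range projections are to the doubling period you assume.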

64-bit architectures

64-bit ARM processors have existed for some time now, and are available with 10nm fabrication, which helps reduce their power consumption. As an example, the latest iteration of the ARM Cortex-A35 draws 32% less power than its predecessor and is 25% more efficient.

However, this is still a premium processor, costing hundreds of dollars. This means it is not yet suitable for mainstream edge ML applications. But just think what will be possible once these high-performance devices drop dramatically in price.

Increased performance for ARM

The majority of high performance edge devices rely on ARM architecture processors. This is because they combine high performance with lower power draw. The very latest ARM architecture chips, such as Apple’s M1, offer impressive processing capabilities, and can even use passive cooling.

However, they are also hugely expensive and relatively power-hungry by edge-device standards. But they show that chip manufacturers are still making real advances. Over time, we will see similar advances in the lower-power ARM cores that are used in high-end MCUs.

Embedded neural networks

The most advanced edge devices are already offering significant machine learning capabilities. Apple’s new M1 embeds its Neural Engine, which Apple claims can perform 11 trillion operations per second. The Neural Engine is a specialised piece of silicon designed specifically to run neural network models.

Currently, such capabilities are reserved for high-end processors but in future they will become mainstream. The approach could also be ported to MCUs and other low-end devices. At that point, we will start to see true deep learning at the edge.

Hardware acceleration

DeepMind’s AlphaGo is one of the most complex deep learning systems to date. But smaller deep learning systems are routinely used nowadays for tasks like advanced image recognition.

However, even a relatively small deep neural network consists of large numbers of layers and many thousands of artificial neurons. Such models need significant parallel processing. This means they are usually run on highly specialised servers that use GPUs to boost their processing performance. This is known as hardware acceleration.
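To get a feel for the workload, the sketch below counts the multiply-accumulate operations (MACs) needed for a single forward pass through a small fully connected network. The function name `dense_macs` and the layer sizes are made up for illustration, and bias additions and activation functions are ignored.

```python
def dense_macs(layer_sizes):
    """Multiply-accumulate operations for one forward pass of a fully connected net."""
    return sum(n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# Hypothetical network: 28x28 pixel input, two hidden layers, 10 output classes.
layers = [784, 256, 128, 10]
print(dense_macs(layers))  # 234752
```

Within each layer, every one of these operations is independent of the others, which is exactly the kind of work a GPU can spread across many cores at once.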

For edge devices, pairing low-power GPUs with MCUs could provide similar hardware acceleration capabilities. This would help edge devices harness deep learning and unsupervised learning.

Mobile phone chipsets already incorporate relatively powerful GPUs. After all, they often need to drive Retina HD screens. Devices like the Raspberry Pi show how such mobile phone chipsets can be repurposed as edge processing platforms. Raspberry Pi even offers a specific compute module designed to be embedded into devices.

What the future will hold for machine learning at the edge

So, what advances might we see once more powerful edge devices are available? Let’s look back at two of the applications from earlier articles and see how these will develop in future.

Computer vision

In the fourth article, I introduced computer vision at the edge. The system was relatively simple: it was able to recognise the presence or absence of a human face. I also mentioned that you can train your own model to be able to recognise other images. However, there are two fundamental limitations because of the lack of computing power in MCUs:

- Your model will only be able to recognise obviously different objects. For instance, it can distinguish a human face from the background, or a dog from a cat. But it will struggle to tell one human from another.

- The model must be trained on a separate system and then embedded into the MCU. This means the model cannot be easily modified or updated in the field.

Security companies already sell edge devices that are capable of facial recognition. But these are powered by CPUs or high-performance mobile phone chipsets. That makes them expensive.

In the near future, even cheap MCUs will be capable of this. And in time, they will be powerful enough to offer continuous learning, allowing them to constantly update their models.

Voice recognition

I also showed how Machine Learning on the edge is powering a revolution in voice control and voice recognition. However, current systems can only recognise a small number of terms.

In effect, they suffer from the same limitations as I described above. But what if the edge devices became more powerful? What might become possible in future?

- Voiceprint recognition. One definite advance will be the ability to recognise individual voices. Amazon’s Alexa already offers this feature, but Alexa devices rely on being connected to the cloud for this. In future, you will be able to train an edge device to recognise your voice, offering additional security for voice control systems.

- NLP at the edge. A key problem with voice recognition is actually understanding what you mean. The example we looked at before was only able to give a response to specific commands. But increasingly powerful MCUs will allow the natural language processing to happen within the edge device itself.

- Reinforcement learning. Voice recognition systems often mishear or misunderstand what we say. Reinforcement learning is a form of learning in which the model improves based on feedback about its actions. This works much like a child learning to ride a bike by trial and error. When the child gets it wrong, they fall off. Over time, they learn what made them fall off and eventually, they learn how to ride the bike. In the same way, you can tell a voice recognition system when it makes a mistake, so it will improve over time. More powerful edge devices will soon offer this sort of capability directly.
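The feedback loop behind reinforcement learning can be sketched in a few lines. This is a deliberately simplified example under my own assumptions: the class `FeedbackLearner`, the two candidate commands, and the reward values of +1 and -1 are all hypothetical. A real system would also need to explore alternatives rather than always picking the current best guess.

```python
class FeedbackLearner:
    """Keeps a running value for each candidate interpretation of a command."""

    def __init__(self, actions, step_size=0.2):
        self.values = {action: 0.0 for action in actions}
        self.step_size = step_size

    def choose(self):
        # Greedy choice: the interpretation with the highest value so far.
        return max(self.values, key=self.values.get)

    def feedback(self, action, reward):
        # Nudge the stored value towards the reward the user just gave.
        self.values[action] += self.step_size * (reward - self.values[action])


learner = FeedbackLearner(["play music", "pause music"])
first_guess = learner.choose()      # ties go to the first option listed
learner.feedback(first_guess, -1)   # the user says that was wrong
second_guess = learner.choose()
learner.feedback(second_guess, +1)  # the user confirms this one
print(learner.choose())             # pause music
```

Each correction costs only a handful of arithmetic operations here, but doing the same for a full speech model is what demands more powerful edge hardware.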

Conclusions

Machine Learning on the edge is still in its early stages. Over the next few years it will become increasingly mainstream. Technologies that are currently only found in high-power ARM CPUs will soon make their way into lower-cost MCUs. This will allow edge ML to offer capabilities that are equivalent to the best cloud-based systems.