Cloud Native is the modern architecture for the next 20 years of application development. Open source technologies combined with cloud computing have defined a new platform that allows developers to rapidly build and operate high-scale applications.

Michael Behrent, Distinguished Engineer for Serverless / FaaS and Chief Architect of IBM Cloud Functions at IBM, spoke about the key role Kubernetes plays in this platform and the work underway to align 12-factor applications and serverless functions with Kubernetes through the Knative project. He also spoke about service meshes with Istio and how developers need to think differently about continuous delivery, the development process, and application architectures. You can take a look at the full presentation below:

The emergence of key platforms

In every era, a new platform emerges along with a new platform architecture. The goal of such a platform is always to let developers focus on core application logic, so that they do not have to worry about anything unrelated to their business logic.

Michael believes that we are seeing the emergence of the next dominant platform, the 20-year platform. He explains, “In the early 2000s, we talked about n-tier architectures, multi-tier architectures. We had mostly monolithic apps with relational databases in the back end; that was the predominant architecture we saw.

Around 2005 this was becoming fragmented with the entry of service orientation, a decoupling of components, and the emergence of Enterprise Service Buses, which allowed a better decoupling of services.

This leads us to today and more of a microservices architecture, which basically takes that concept of decoupled, loosely coupled components, each of them able to evolve at its own rate and pace, to the next level.”

Circa 2000

- N-tiers were the application platform

- Usually based on a single language

- Driven by monolithic architecture

- Heavy, opinionated application frameworks (Java EE)

The application servers of that time predominantly took care of all the non-functional concerns, such as scalability, availability, security, and what we today call observability.

After on-premise computing, enter the cloud

Today our designs are enabled by cloud computing, unlike the on-premise architecture of the 2000s. This means highly distributed architectures with many components, where we have to design for failure much more than we did in the past, partly because of the simple statistics of having many components running at the same time that all have to be available.
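The statistics mentioned here can be made concrete with a small worked example. This is a minimal sketch, assuming that components fail independently and that a request needs every one of them; the function name and the 99.9% figure are illustrative assumptions, not numbers from the talk.

```python
def combined_availability(per_component, count):
    """Chance that all `count` independent components are up at the same time.

    Assumes failures are independent and that every component is required.
    """
    return per_component ** count


# One component at 99.9% availability is rarely a problem on its own.
single = combined_availability(0.999, 1)

# A request that touches 100 such components succeeds only about 90.5%
# of the time, which is why designing for failure matters more here.
chain_of_100 = combined_availability(0.999, 100)
```

Under these assumptions, availability drops multiplicatively as components are added, so resilience features such as retries and circuit breaking become part of the design rather than an afterthought.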

According to Michael, “It’s more of a multi-language environment – driven by independent teams and desire to use the best tech for the job.”

It involves minimal application frameworks: “Most of the non-functional capabilities that are provided are embedded in a language-neutral way. But the platform will still provide the core functions around all the non-functionals that we already had in the application server days.”

Today: a Microservices platform aids cloud computing

There is a kind of unified microservices platform available today, which basically consists of a container infrastructure with Kubernetes. Kubernetes is widely used and very dominant. It provides many non-functional capabilities:

- Scheduling

- Self-healing if something breaks

- Horizontal scaling

- Service discovery assistance

But is Kubernetes alone enough to deliver such an architecture and build large-scale, robust, production-level applications?

It’s a key component, but on its own it’s not quite there. It still needs help with:

- Security in the cloud and beyond

- Canary deployments

- A/B testing

- Retries if something fails and circuit breaking

- Rate limiting

- Fault injection

- Policy management

- Telemetry

In order to address these non-functional requirements, you need something like a service mesh that helps you with observability, resiliency, traffic control, etc.
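Two of the items listed above, retries and circuit breaking, can be sketched in a few lines. The following is a simplified illustration and not how any particular service mesh implements them; the class name, function name, and all parameters (`max_failures`, `reset_after`, `attempts`) are hypothetical.

```python
import time


class CircuitOpenError(Exception):
    """Raised when the breaker refuses a call without attempting it."""


class CircuitBreaker:
    """Hypothetical breaker that opens after consecutive failures."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after  # seconds to wait before a trial call
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, func, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpenError("circuit open, call skipped")
            # Cool-down elapsed: allow one trial call; one more failure re-opens.
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = func(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        self.failures = 0
        return result


def call_with_retries(func, attempts=3, delay=0.0):
    """Retry `func` up to `attempts` times (at least 1), re-raising the last error."""
    last_error = None
    for attempt in range(attempts):
        try:
            return func()
        except CircuitOpenError:
            raise  # retrying cannot help while the circuit is open
        except Exception as error:
            last_error = error
            if attempt < attempts - 1 and delay:
                time.sleep(delay)
    raise last_error
```

In a service mesh this logic sits outside the application, so every service gets the same behaviour regardless of the language it is written in.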

Istio can help

Istio is part of the Kubernetes ecosystem. It basically allows you to connect microservices in a loosely coupled way, to be flexible in terms of their connections, and to secure the communication. You have control over which component, and which version of a component, is being used, and you can observe the traffic and find out if something goes wrong.
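Controlling which version of a component receives traffic is what makes canary deployments and A/B testing possible. Istio expresses such a split declaratively in its routing configuration; the Python below only mimics the decision itself. It is a minimal sketch, and the function name, version labels, and weights are all assumptions made for illustration.

```python
import random


def pick_version(weights, rng=random):
    """Pick a version name with probability proportional to its weight.

    `weights` maps a (hypothetical) version label to a non-negative number.
    """
    versions = list(weights)
    return rng.choices(versions, weights=[weights[v] for v in versions], k=1)[0]


# Send roughly 10% of requests to the new version and the rest to the old one.
canary_split = {"v1": 90, "v2": 10}
chosen = pick_version(canary_split)
```

Shifting the weights step by step, for example from 90/10 to 50/50 to 0/100, rolls the new version out gradually while the old one remains available as a fallback.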

How does Istio work?

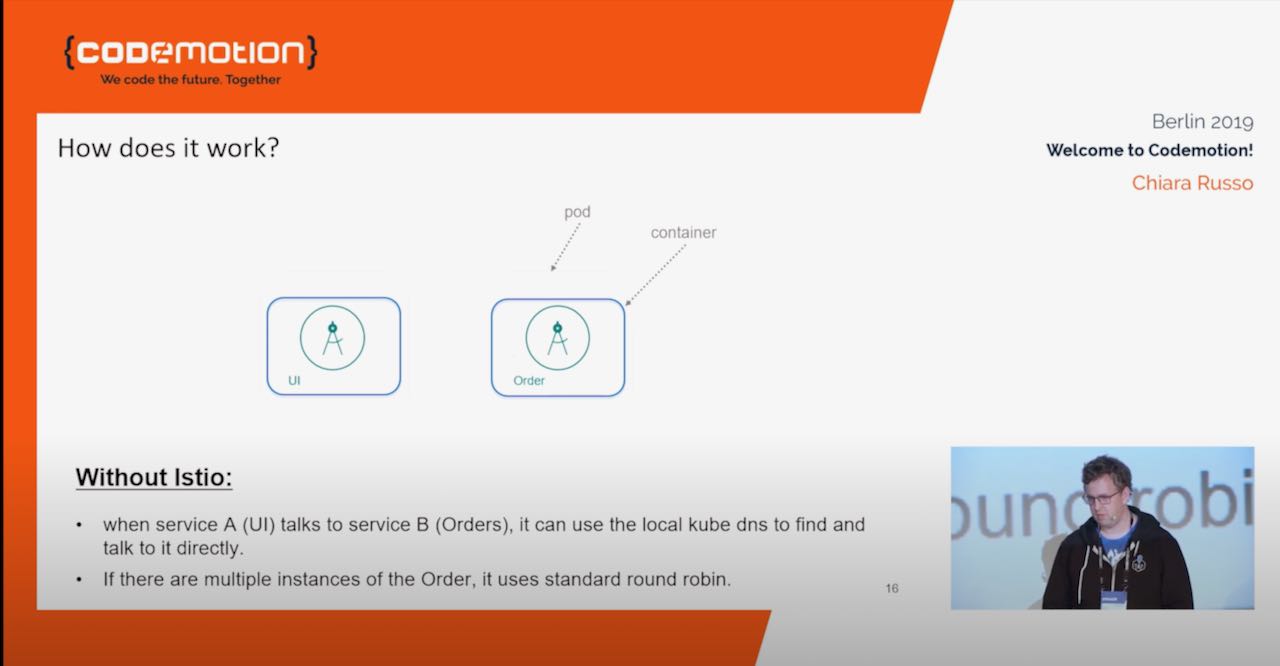

Michael explains: “In a very simplified fashion, you have two microservices. For example, one implementing the UI, the other one implementing some ordering system. Each of them runs as a container in a pod on Kubernetes. So, without a service mesh, you just have them talking one to one with each other. You can use the local Kube DNS in order to help them discover each other, but that’s pretty much all you have.

If you introduce something like a service mesh, you basically introduce a small additional component on each end of that architecture: each microservice has a sidecar (such as Envoy) deployed alongside it. It basically intercepts all the traffic going in and out. And by intercepting all the traffic, it gives you a control point where you can enforce all those capabilities that I described before.”
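The idea of a sidecar as a control point can be illustrated with a small wrapper that sits in front of a service function. This is only a conceptual sketch: a real sidecar such as Envoy intercepts network traffic rather than Python calls, and the `Sidecar` class, its fields, and the `ordering` function are hypothetical.

```python
import time


class Sidecar:
    """Hypothetical stand-in for a proxy that wraps every call to one service."""

    def __init__(self, service_name, handler, allowed_callers=None):
        self.service_name = service_name
        self.handler = handler
        self.allowed_callers = allowed_callers  # None means "allow everyone"
        self.telemetry = []

    def handle(self, caller, request):
        start = time.perf_counter()
        status = "error"  # overwritten below unless the handler raises
        try:
            if self.allowed_callers is not None and caller not in self.allowed_callers:
                status = "denied"
                raise PermissionError(f"{caller} may not call {self.service_name}")
            response = self.handler(request)
            status = "ok"
            return response
        finally:
            # Every call is recorded, whether it succeeded, failed, or was denied.
            self.telemetry.append({
                "caller": caller,
                "service": self.service_name,
                "status": status,
                "seconds": time.perf_counter() - start,
            })


def ordering(request):
    """Toy ordering service that knows nothing about policy or telemetry."""
    return {"order": request["item"]}


proxy = Sidecar("ordering", ordering, allowed_callers={"ui"})
reply = proxy.handle("ui", {"item": "book"})
```

The ordering function contains only business logic; policy and telemetry live entirely in the wrapper, which mirrors the separation the sidecar provides.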

Introducing Knative to cloud computing

Knative is an opinionated and simplified view of application management. It’s heavily influenced by serverless. Michael shares: “So it does things like scale to zero, scale from zero, and so on. It allows the developer to focus on coding, which is our ultimate goal. It’s natively integrated in Kube with Kubernetes extension resources (CRDs), so it integrates very seamlessly with anything that is done with Kube these days.”

There are two Knative subprojects:

Serving

Run serverless containers on Kubernetes with ease. Knative takes care of the details of networking, autoscaling (even to zero), and revision tracking. You just have to focus on your core logic.
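From the developer's side, a serverless container in this sense is simply a process that answers HTTP on a port. The sketch below uses only Python's standard library. Knative's runtime contract supplies the port through a `PORT` environment variable; the 8080 fallback, the handler name, and the response text are assumptions of this example.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


def listen_port(environ=os.environ, default=8080):
    """Read the port to listen on from the PORT environment variable."""
    return int(environ.get("PORT", default))


class HelloHandler(BaseHTTPRequestHandler):
    """Hypothetical handler holding the 'core logic' of the container."""

    def do_GET(self):
        body = b"Hello from a container\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve():
    """Block forever, answering GET requests on the configured port."""
    HTTPServer(("0.0.0.0", listen_port()), HelloHandler).serve_forever()
```

Calling `serve()` as the container's entry point is all the application has to do; networking, scaling, and revision tracking are left to the platform.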

Eventing

Universal subscription, delivery, and management of events. Build modern apps by attaching compute to a data stream with declarative event connectivity and a developer-friendly object model.

The benefits of Knative Services

According to Michael: “What is so nice about Knative services is that you can basically run everything that talks HTTP on a port, which is a lot of what we are running these days. It has a multi-threaded model, so you can have the purist serverless behaviour of one thread, one process, one container, or you can have it in a multi-threaded way.

You can specify a minimum number of instances that should always be running. It has an auto-scaling capability built in that scales by the number of requests.

We basically have a platform that allows us to run native containers, services, functions, 12-factor apps, all of that spectrum of components we see primarily in today’s microservices architecture, in a seamless fashion.”
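Scaling by the number of requests, with an optional minimum number of instances, can be sketched as a simple calculation. This is a deliberately simplified illustration and not Knative's actual autoscaler, which works on metrics averaged over time. The function and parameter names are hypothetical, and `max_instances` is an added assumption that the talk does not mention.

```python
import math


def desired_instances(in_flight_requests, target_per_instance,
                      min_instances=0, max_instances=None):
    """Return how many instances a request-based autoscaler might ask for.

    `target_per_instance` is the number of concurrent requests one instance
    should handle; a value of 1 gives the one-request-per-container model.
    """
    if target_per_instance <= 0:
        raise ValueError("target_per_instance must be positive")
    wanted = math.ceil(in_flight_requests / target_per_instance)
    wanted = max(wanted, min_instances)  # keep the configured floor running
    if max_instances is not None:
        wanted = min(wanted, max_instances)
    return wanted


idle = desired_instances(0, 10)                    # no traffic: scale to zero
warm = desired_instances(0, 10, min_instances=2)   # floor keeps two instances
busy = desired_instances(25, 10)                   # 25 requests need 3 instances
```

With a minimum of zero the service disappears entirely when idle, while a non-zero minimum trades some idle cost for avoiding cold starts.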