The expansion of artificial intelligence (AI) relies on trust. Users will reject machine learning (ML) systems they cannot trust. We will not trust decisions made by models that do not provide clear explanations. An AI system must provide clear explanations, or it will gradually become obsolete.

This article is an excerpt from the book Hands-on Explainable AI (XAI) with Python, by Denis Rothman – a comprehensive guide to resolving the black box models in AI applications to make them fair, trustworthy, and secure. The book not only covers the basic principles and tools to deploy Explainable AI (XAI) into your apps and reporting interfaces, but also enables readers to work with specific hands-on machine learning Python projects that are strategically arranged to enhance their grasp of AI results analysis.

The Local Interpretable Model-agnostic Explanations (LIME) approach aims to reduce the distance between AI and humans. Like SHAP and WIT, LIME is people-oriented. LIME focuses on two main areas: trusting a model and trusting a prediction. LIME provides a unique explainable AI (XAI) algorithm that interprets predictions locally.

Introducing LIME

LIME stands for Local Interpretable Model-Agnostic Explanations. LIME explanations can help a user trust an AI system. A machine learning model is often trained on 100 or more features to reach a prediction. Showing all these features in an interface makes it nearly impossible for a user to analyze the result visually.

In the chapter Microsoft Azure Machine Learning Model Interpretability with SHAP, we used SHAP to calculate the marginal contribution of a feature to the model for a given prediction. The Shapley value of a feature represents its contribution to one or several sets of features. LIME takes a different approach.

LIME wants to find out whether a model is locally faithful regardless of the model. Local fidelity verifies how a model represents the features around a prediction. Local fidelity might not fit the model globally, but it explains how the prediction was made. In the same way, a global explanation of the model might not explain a local prediction.

For example, in previous chapters of the book, we helped a doctor conclude that a patient was infected with the West Nile virus.

Our global feature set was as follows:

Features = {colored sputum, cough, fever, headache, days, france, chicago, class}

Our global assumption was that the following main features led to the conclusion with a high probability value that the patient was infected with the West Nile virus:

Features = {bad cough, high fever, bad headache, many days, chicago=true}

Our predictions relied on that ground truth.

But was this global ground truth always verified locally? What if a prediction was true for the West Nile virus with a slightly different set of features—how could we explain that locally?

LIME will explore the local vicinity of a prediction to explain it and analyze its local fidelity.

For example, suppose a prediction was a true positive for the West Nile virus but not precisely for the same reasons as our global model. LIME will search the vicinity of the instance of the prediction to explain the decision the model made.

In this case, LIME could find a high probability of the following features:

Local explanation = {high fever, mild sputum, mild headache, chicago=true}

We know that a patient with a high fever and a headache who was in Chicago points to the West Nile virus. The presence of chills might provide an explanation as well, although the global model had a different view. Locally, the prediction is faithful, although globally, our model's output was positive when many days and bad cough were present, and mild sputum was absent.

The prediction is thus locally faithful to the model. It has inherited the global feature attributes that LIME will detect and explain locally.

In this example, LIME did not take the model into account. LIME used the global features involved in a prediction and the local instance of the prediction to explain the output. In that sense, LIME is a model-agnostic model explainer.

We now have an intuitive understanding of what Local Interpretable Model-Agnostic Explanations are. We can now formalize LIME mathematically.

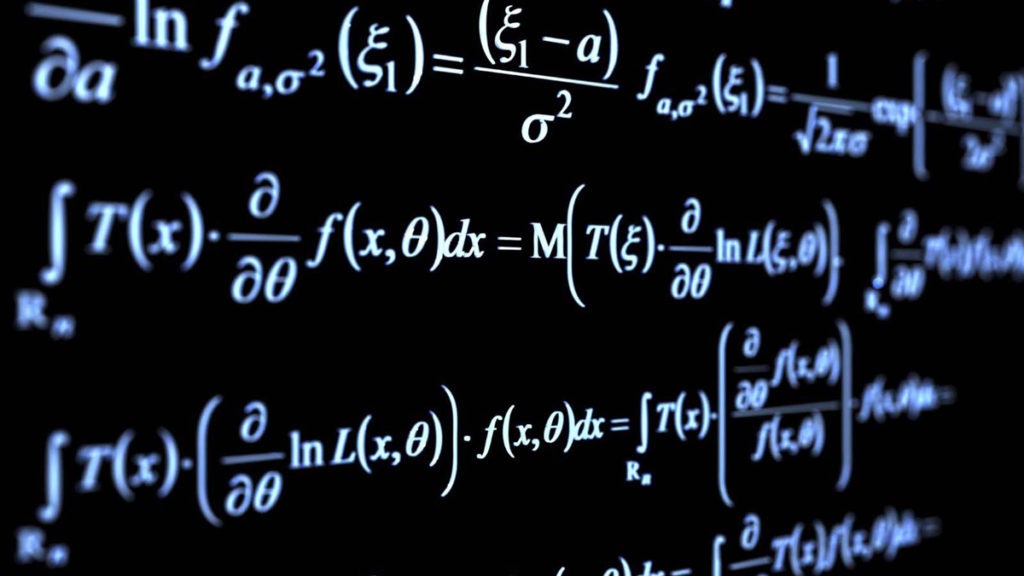

A mathematical representation of LIME

In this section, we will translate our intuitive understanding of LIME into a mathematical expression of LIME.

The original, global representation of an instance x can be represented as follows:

$x \in \mathbb{R}^d$

However, an interpretable representation of an instance is a binary vector:

$x' \in \{0, 1\}^{d'}$

The interpretable representation determines the local presence or absence of a feature or several features.
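To make this binary representation concrete, here is a minimal sketch in Python. The feature list and the helper name `interpretable_representation` are assumptions made for illustration and are not part of the book's code. The function simply marks each feature word as present (1) or absent (0) in a sentence.

```python
# Hypothetical vocabulary, loosely borrowed from the feature set shown earlier.
FEATURES = ["sputum", "cough", "fever", "headache", "days", "france", "chicago"]


def interpretable_representation(text, features=FEATURES):
    """Return a binary vector: 1 if the feature word occurs in the text, else 0."""
    # Lowercase and strip basic punctuation so that "Chicago," matches "chicago".
    words = {w.strip(".,;!?").lower() for w in text.split()}
    return [1 if f in words else 0 for f in features]


x_prime = interpretable_representation(
    "Doctor, when I was in Chicago, I came back with a fever."
)
print(x_prime)
```

The original text stays in its own rich representation (words, embeddings, TF-IDF values), while the explanation only has to reason about which of these few features are switched on or off.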

Let’s now consider the model-agnostic property of LIME. g represents a machine learning model. G represents a set of models containing g among other models:

g ∈ G

As such, LIME’s algorithm will interpret any other model in the same manner.

The domain of g being a binary vector, we can represent it as follows:

$\mathrm{dom}(g) = \{0, 1\}^{d'}$

Now, we face a difficult problem! The complexity of g ∈ G might create difficulties in analyzing the vicinity of an instance. We must take this factor into account. We will note the complexity of an interpretation of a model as follows:

Ω(g)

We encountered such a complexity problem in one of our previous chapters, White Box XAI for AI Bias and Ethics, when interpreting decision trees. When we began to explain the structure of a decision tree, we found that “the default output of a default decision tree structure challenges a user's ability to understand the algorithm.” In that situation, we fine-tuned the decision tree’s parameters to limit the complexity of the output to explain.

We thus need to measure the complexity of a model with Ω(g). High model complexity can hinder AI explanations and interpretations of a probability function f(x). Ω(g) must be low enough for humans to be able to interpret a prediction.

f(x) defines the probability that x belongs to a given class. The explanation of that prediction is expressed over the binary vector we defined previously, as follows:

$x' \in \{0, 1\}^{d'}$

The model can thus also be defined as follows:

$f : \mathbb{R}^d \to \mathbb{R}$

Now, we need to add an instance z and see how close it lies to x. We must measure the locality around x. For example, consider the features (such as fever, Chicago, and days) in the two following sentences:

- Sentence 1: We danced all night; it was like in the movie Saturday Night Fever, or that movie called Chicago with that murder scene that sent chills down my spine. I listened to music for days when I was a teenager.

- Sentence 2: Doctor, when I was in Chicago, I hardly noticed a mosquito bit me, then when I got back to France, I came up with a fever.

Sentence 2 leads to a West Nile virus diagnosis. However, a model g could predict that Sentence 1 is a true positive as well. The same could be said of the following Sentence 3:

- Sentence 3: I just got back from Italy, have a bad fever, and difficulty breathing.

Sentence 3 leads to a COVID-19 diagnosis that may or may not be a false positive. I will not elaborate while in isolation in France at the time of writing this book. It would not be ethical to make mathematical models for educational purposes in such difficult times for hundreds of millions of us around the world.

In any case, we can see the importance of a proximity measurement between an instance z and the locality around x. We will define this proximity measurement as follows:

$\pi_x(z)$
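One common way to build such a proximity measurement is an exponential kernel over a distance D, that is, $\pi_x(z) = \exp(-D(x, z)^2 / \sigma^2)$. The sketch below assumes cosine distance for D and an arbitrary width `sigma=0.75`. Both choices, as well as the function names, are illustrative assumptions rather than values taken from this article.

```python
import math


def cosine_distance(a, b):
    """Cosine distance between two equal-length vectors (0 = same direction)."""
    dot = sum(ai * bi for ai, bi in zip(a, b))
    norm_a = math.sqrt(sum(ai * ai for ai in a))
    norm_b = math.sqrt(sum(bi * bi for bi in b))
    if norm_a == 0 or norm_b == 0:
        # Convention chosen for this sketch: an all-zero vector is maximally distant.
        return 1.0
    return 1.0 - dot / (norm_a * norm_b)


def proximity(x, z, sigma=0.75):
    """Exponential kernel: close to 1 when z is near x, smaller as z moves away."""
    d = cosine_distance(x, z)
    return math.exp(-(d ** 2) / sigma ** 2)


x = [0, 0, 1, 0, 0, 0, 1]       # fever and chicago present
z_near = [0, 0, 1, 0, 0, 1, 1]  # same, plus france
z_far = [0, 1, 0, 0, 0, 0, 0]   # only cough present

print(proximity(x, z_near), proximity(x, z_far))
```

An instance that shares most features with x receives a weight close to 1, while an instance with nothing in common receives a much smaller one, so it counts for less when we explain the prediction for x.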

We now have all the variables we need to define LIME, except one important one. How faithful is this prediction to the global ground truth of our model? Can we explain why it is trustworthy? To answer these questions, we will determine how unfaithful a model g can be when approximating f in the locality $\pi_x$.

For all we know, a prediction could be a false positive! Or the prediction could be a false negative! Or even worse, the prediction could be a true positive or negative for the wrong reasons. This shows how unfaithful g can be.

We will measure unfaithfulness with the letter L.

$L(f, g, \pi_x)$ measures how unfaithful g is when approximating f in the locality we defined as $\pi_x$.

We must minimize the unfaithfulness $L(f, g, \pi_x)$ while keeping the complexity $\Omega(g)$ as low as possible.

Finally, we can define an explanation E generated by LIME as follows:

$E(x) = \operatorname*{argmin}_{g \in G} \; L(f, g, \pi_x) + \Omega(g)$

LIME will draw samples weighted by $\pi_x$ to optimize the equation and produce the best interpretation and explanation E(x), regardless of the model implemented.
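The whole procedure can be sketched in a few lines of NumPy. This is a simplified illustration under stated assumptions, not the implementation found in the lime package: `black_box` is a hypothetical stand-in for f, the proximity is an exponential kernel over cosine distance with an arbitrary width, g is a linear model, the unfaithfulness is a proximity-weighted squared error, and a small ridge penalty stands in for the complexity term Ω(g).

```python
import numpy as np


def black_box(z):
    """Hypothetical f: probability of a positive diagnosis.
    Columns are assumed to be fever (0), sputum (1), and chicago (2)."""
    logits = 3.0 * z[:, 0] + 0.1 * z[:, 1] + 2.0 * z[:, 2] - 2.5
    return 1.0 / (1.0 + np.exp(-logits))


def explain(x_prime, f, n_samples=500, sigma=0.75, ridge=1e-3, seed=0):
    """Fit a local linear surrogate g around x_prime; return (weights, intercept)."""
    rng = np.random.default_rng(seed)
    d = x_prime.shape[0]

    # Sample the vicinity of x': randomly switch off features that are present.
    mask = rng.integers(0, 2, size=(n_samples, d))
    Z = mask * x_prime
    Z[0] = x_prime  # keep the original instance among the samples

    # Proximity weights pi_x(z): exponential kernel over cosine distance.
    norms = np.linalg.norm(Z, axis=1) * np.linalg.norm(x_prime)
    cos = np.divide(Z @ x_prime, norms, out=np.zeros(n_samples), where=norms > 0)
    weights = np.exp(-((1.0 - cos) ** 2) / sigma ** 2)

    # Minimize sum_z pi_x(z) * (f(z) - g(z))^2 + ridge * ||w||^2 in closed form.
    A = np.hstack([Z, np.ones((n_samples, 1))])  # last column is the intercept
    reg = ridge * np.eye(d + 1)
    reg[-1, -1] = 0.0  # do not penalize the intercept
    lhs = A.T @ (weights[:, None] * A) + reg
    rhs = A.T @ (weights * f(Z))
    coef = np.linalg.solve(lhs, rhs)
    return coef[:-1], coef[-1]


features = ["fever", "sputum", "chicago"]
x_prime = np.array([1, 1, 1])
w, b = explain(x_prime, black_box)
for name, weight in sorted(zip(features, w), key=lambda t: -abs(t[1])):
    print(f"{name}: {weight:+.3f}")
```

The surrogate never looks inside `black_box`; it only queries it on perturbed samples. That is what makes the approach model-agnostic: any function returning a probability could be passed as `f`.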

LIME can be applied to various models, fidelity functions, and complexity measures. However, LIME’s approach will follow the method we have defined.

For more on LIME theory, consult the References section at the end of this chapter.

We can now get started with this intuitive view and mathematical representation of LIME in mind!

Getting started with LIME

In this section, we will install LIME using LIME.ipynb, a Jupyter Notebook on Google Colaboratory. We will then retrieve the 20 newsgroups dataset from sklearn.datasets.

We will read the dataset and vectorize it.

The process is a standard scikit-learn approach that you can save and use as a template for other projects in which you implement other scikit-learn models.
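As a rough template for this process, the sketch below loads two categories of the 20 newsgroups dataset and vectorizes the text. The category names, the choice of `TfidfVectorizer`, and the helper names `load_newsgroups` and `vectorize` are assumptions made for illustration; the article does not specify them.

```python
from sklearn.datasets import fetch_20newsgroups
from sklearn.feature_extraction.text import TfidfVectorizer

# Assumed categories, chosen only for illustration.
CATEGORIES = ["sci.electronics", "sci.space"]


def load_newsgroups(categories=CATEGORIES):
    """Return the train and test splits (downloads the data on first call)."""
    train = fetch_20newsgroups(subset="train", categories=categories)
    test = fetch_20newsgroups(subset="test", categories=categories)
    return train, test


def vectorize(train_texts, test_texts):
    """Fit a TF-IDF vectorizer on the training texts and transform both splits."""
    vectorizer = TfidfVectorizer()
    train_vectors = vectorizer.fit_transform(train_texts)
    # The test split is only transformed, so it shares the training vocabulary.
    test_vectors = vectorizer.transform(test_texts)
    return vectorizer, train_vectors, test_vectors
```

In a notebook, we could call `train, test = load_newsgroups()` and then `vectorize(train.data, test.data)`. Keeping the two steps in separate functions makes it easy to reuse the vectorizing step with another dataset.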

We have already used this process in a previous chapter, Microsoft Azure Machine Learning Model Interpretability with SHAP. In this chapter, we will not examine the dataset from an ethical perspective. I chose one with no ethical ambiguity.

We will directly install LIME, then import and vectorize the dataset.

Let’s now start by installing LIME on Google Colaboratory.

Installing LIME on Google Colaboratory

Open LIME.ipynb. We will be using LIME.ipynb throughout this chapter. The first cell contains the installation command:

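```python
# @title Installing LIME
try:
    import lime
except ImportError:
    # lime is not available (for example, after a Colab restart): install it
    print("Installing LIME")
    !pip install lime
```

The try/except block first tries to import lime. If lime is not installed, the import fails, and without the try/except the program would crash. In this code, the except clause catches the failure and triggers !pip install lime.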

Google Colaboratory deletes some libraries and modules when you restart it, as well as the current variables of a notebook. We then need to reinstall some packages. This piece of code will make the process seamless.

Summary

In this article, we were first introduced to LIME and how it works. Once we had covered the fundamentals, we saw how LIME is represented mathematically. Finally, we learned how to get started with LIME in our AI projects.