Natural Language Processing (NLP) is one of the most active fields of research, and interest in it has risen sharply in the last few years. The basics of NLP are widely known and easy to master, but things start to get complex when the text corpus becomes huge. That’s where deep learning comes in. Over the last decade, deep learning has proven its usefulness in computer vision tasks such as object detection, image classification and segmentation, whereas NLP applications, like text classification or machine translation, were long considered suited to traditional machine learning techniques only. That’s not true anymore. And thanks to the amazing fastai library, you can quickly leverage the most recent findings.

Deep Learning frameworks and NLP

Applying deep learning to text is relatively new, with the first state-of-the-art results dating back to late 2017. Some breakthrough discoveries and new techniques were introduced in 2018, marking what NLP researcher Sebastian Ruder called the ImageNet moment for NLP. Research is ongoing and very fast-paced, producing new state-of-the-art results almost every month. The long reign of word vectors as NLP’s core representation technique has seen an exciting new line of challengers emerge: Transformer, ELMo, ULMFiT, BERT and Transformer-XL. This is a very interesting moment to start exploring, studying and applying machine learning to textual data. No way to get bored.

To design and train deep neural networks, you can choose between many frameworks, such as PyTorch or TensorFlow, just to name the most famous. If you don’t need to deal with low-level details, there are higher-level libraries, built on top of them, which provide building blocks to design and train custom neural networks quickly. What Keras is to TensorFlow, the fastai library is to PyTorch.

The goal of the fastai library is to make training deep neural networks as easy as possible and, at the same time, fast and accurate, using modern best practices.

It’s based on research into deep learning best practices undertaken at fast.ai, a San Francisco-based research institute founded and led by Jeremy Howard and Rachel Thomas.

The library includes “out of the box” support for computer vision tasks, text and natural language processing, tabular/structured data classification or regression, and collaborative filtering models, the kind at the core of modern recommendation engines.

What you can do with fastai library

NLP with fastai library

The fastai library was the first library to make deep learning for NLP easy to adopt, with state-of-the-art results back in early 2018, when it introduced ULMFiT (Universal Language Model Fine-tuning). ULMFiT combined various NLP deep learning techniques and architectures derived from previous attempts and research papers into a new, quite simple end-to-end pipeline, with two main purposes: to deal with NLP problems where little annotated data or limited computational resources are available, and to make NLP classification tasks easier.

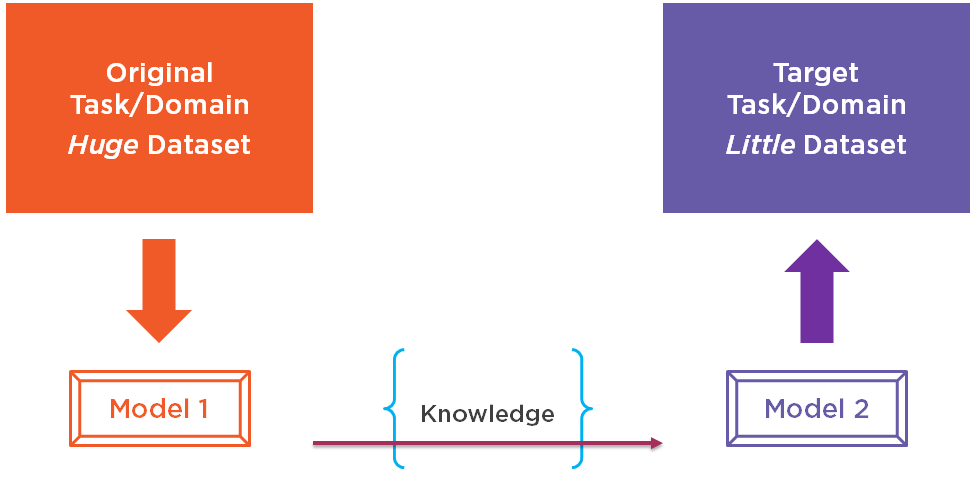

At its core, ULMFiT successfully implements transfer learning and fine-tuning for textual data. Transfer learning is a machine learning method where a model developed for one task is reused as the starting point for a model on a second task. It has long been, and still is, a popular approach to deep learning for computer vision tasks, where models pre-trained on large datasets, such as ImageNet or Microsoft COCO, are used as the starting point, given the vast compute and time resources required to train neural networks on these problems and the huge jumps in accuracy that they provide on related, more domain-specific problems. One common way to do this is by fine-tuning the original model. Because the fine-tuned model doesn’t have to learn from scratch, it can generally reach higher accuracy with much less data and computation time than models that don’t use transfer learning.

Until ULMFiT, the obstacle to applying this technique to textual data was a lack of knowledge of how to train and fine-tune pre-trained models effectively, which hindered wider adoption. The idea had been tried before, but required millions of documents for adequate performance. fast.ai researchers applied a variant of Stephen Merity’s language model (AWD-LSTM, from Salesforce Research), combined with other training and regularisation strategies, obtaining practical transfer learning for text applications.

Transfer learning

In general, a language model is an NLP model which learns to predict the next word in a sentence. It may seem a trivial task, but it is very important: for a language model to be good at guessing what someone says next, it needs a lot of world knowledge and a deep understanding of grammar, semantics and other elements of natural language. This is exactly the kind of knowledge that we, as humans, leverage implicitly when we read and classify a document. Once a language model is trained on a very large text corpus, for example Wikipedia (as done by the fast.ai team, who made the result available for everyone to use), we can then adapt it to perform other tasks, such as classifying a document or estimating the sentiment of a phrase.
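To make next-word prediction concrete, here is a deliberately tiny sketch. It is not how fastai or ULMFiT work (they train a neural network); simple bigram counts are swapped in here only to show the task itself. The function names and the three-sentence corpus are made up for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count, for every word, which words follow it in the corpus."""
    following = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        # Pair each word with the word that comes right after it.
        for current_word, next_word in zip(words, words[1:]):
            following[current_word][next_word] += 1
    return following


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = model.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical toy corpus, standing in for something like Wikipedia.
corpus = [
    "the movie was great",
    "the movie was long",
    "the movie was great fun",
]
model = train_bigram_model(corpus)
print(predict_next(model, "was"))
```

A real language model replaces the count table with a neural network that looks at the whole preceding context, which is what lets it pick up grammar and meaning rather than just word pairs.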

As previously stated, the fastai library provides high-level APIs and objects to quickly and easily train models on your own datasets, and they are quite similar for each supported data type (images, text, tabular data or collaborative filtering).

Without going into the details, a few lines of code, like those in the fastai library documentation, are enough to train a text classifier (a sentiment predictor) in a few minutes, with a good level of accuracy, even if your dataset is not so big, thanks to transfer learning and the pre-trained English model based on Wikipedia.
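As a rough sketch of what those few lines look like, the function below follows the text quick start in the fastai v1 documentation. It assumes fastai v1 is installed (the version built around `DataBunch`) and that the IMDB sample dataset can be downloaded; the function name `train_sentiment_classifier` and the encoder file name `ft_enc` are illustrative choices, not part of the library.

```python
def train_sentiment_classifier():
    """Fine-tune a pre-trained language model, then train a classifier on top of it."""
    # Imported lazily so the sketch can be loaded even where fastai v1 is not installed.
    from fastai.text import (
        AWD_LSTM,
        TextClasDataBunch,
        TextLMDataBunch,
        URLs,
        language_model_learner,
        text_classifier_learner,
        untar_data,
    )

    # Download a small sample of the IMDB movie reviews dataset.
    path = untar_data(URLs.IMDB_SAMPLE)

    # One DataBunch for the language model, one for the classifier (sharing the vocabulary).
    data_lm = TextLMDataBunch.from_csv(path, "texts.csv")
    data_clas = TextClasDataBunch.from_csv(
        path, "texts.csv", vocab=data_lm.train_ds.vocab, bs=32
    )

    # Step 1: fine-tune the Wikipedia pre-trained language model on the reviews.
    learn_lm = language_model_learner(data_lm, AWD_LSTM, drop_mult=0.5)
    learn_lm.fit_one_cycle(1, 1e-2)
    learn_lm.save_encoder("ft_enc")

    # Step 2: train the sentiment classifier, reusing the fine-tuned encoder.
    learn_clas = text_classifier_learner(data_clas, AWD_LSTM, drop_mult=0.5)
    learn_clas.load_encoder("ft_enc")
    learn_clas.fit_one_cycle(1, 1e-2)
    return learn_clas
```

Calling `learn = train_sentiment_classifier()` and then `learn.predict("This was a great movie!")` should return the predicted sentiment for a new review. One epoch per step is only enough for a quick try; real training would use more epochs and gradual unfreezing.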

In addition to providing best practices and techniques for deep learning NLP, the library provides text pre-processing modules for feeding text into neural networks, such as tokenisation (based on the amazing spaCy library, but still totally customisable, if needed) and numericalisation (mapping words to unique ids). So, you only need to prepare your text datasets according to the task you want to perform, load them in a DataBunch and train your model. That’s all!
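The two pre-processing steps can be sketched in plain Python. This is only an illustration of the idea, not the fastai implementation: a simple regular expression stands in for the spaCy tokeniser, the function names are made up, and the `xxunk` placeholder borrows fastai's convention for words missing from the vocabulary.

```python
import re
from collections import Counter

UNK = "xxunk"  # placeholder id for words that are not in the vocabulary


def tokenize(text):
    """Split text into lowercase word and punctuation tokens (regex stand-in for spaCy)."""
    return re.findall(r"\w+|[^\w\s]", text.lower())


def build_vocab(texts, min_freq=1):
    """Build id-to-token and token-to-id mappings from a list of texts."""
    counts = Counter(token for text in texts for token in tokenize(text))
    itos = [UNK] + sorted(tok for tok, count in counts.items() if count >= min_freq)
    stoi = {token: index for index, token in enumerate(itos)}
    return itos, stoi


def numericalize(text, stoi):
    """Map each token of a text to its id, falling back to the unknown id."""
    return [stoi.get(token, stoi[UNK]) for token in tokenize(text)]


# Hypothetical two-review dataset.
texts = ["I loved this movie!", "I hated this movie."]
itos, stoi = build_vocab(texts)
print(tokenize("I loved this movie!"))
print(numericalize("I loved this film!", stoi))
```

The word "film" never appears in the two training texts, so it is mapped to the unknown id; this is why a vocabulary built on a large corpus matters.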

Additional Resources

To get started with the fastai library, the most useful resource is its documentation.

For an in-depth general introduction to deep learning, you can follow the official free fast.ai MOOCs: they are amazing practical courses for coders, taught with a “top-down” approach. Jeremy Howard starts from hands-on real-world examples and then explores the theory behind each technique, implementing some of the most recent research papers from scratch in PyTorch.

- Practical Deep Learning for Coders

- Cutting Edge Deep Learning for Coders

- Introduction to Machine Learning for Coders

- Computational Linear Algebra

If, instead, you wish to know more about deep learning for NLP with fastai, with examples and code to bring your models to production, or how to train your own language models, take a look at my new Pluralsight course on the topic.